Unlocking the power of larger context windows

This is a follow-up to Building a Reddit Thread Summarizer With ChatGPT API. See that article for some context and information about how I built the app’s first version.

June 13th, 2023

OpenAI has rolled out several exciting updates that improve the capabilities of its GPT models while making them more cost-effective. Among the key changes are the introduction of a new function calling capability in the Chat Completions API, updated and more steerable versions of gpt-4 and gpt-3.5-turbo, and a new 16k context version of gpt-3.5-turbo, offering four times the context length of the standard 4k version.

The function calling feature allows developers to create more interactive applications by connecting GPT’s capabilities with external tools and APIs. It can convert natural language into function calls and API calls, extract structured data from text, and interact with databases using natural language.
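To make the structured-data use case a little more concrete, here is a minimal sketch of a function definition in the JSON Schema style that the Chat Completions API accepts, plus a helper that decodes the `function_call` an assistant message can carry. The function name `record_thread_summary`, its fields, and the sample message are all hypothetical, and no API call is made.

```python
import json
from typing import Tuple

# Hypothetical function definition, in the shape passed via the `functions`
# parameter of a chat completion request.
SUMMARY_FUNCTION = {
    "name": "record_thread_summary",
    "description": "Store a structured summary of a Reddit thread.",
    "parameters": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "key_points": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["title", "key_points"],
    },
}


def parse_function_call(message: dict) -> Tuple[str, dict]:
    """Return (function name, decoded arguments) from an assistant message.

    The model returns `arguments` as a JSON-encoded string, which may be
    malformed, so decoding errors are surfaced explicitly.
    """
    call = message.get("function_call")
    if call is None:
        raise ValueError("assistant message contains no function_call")
    try:
        arguments = json.loads(call["arguments"])
    except json.JSONDecodeError as exc:
        raise ValueError(f"model returned invalid JSON arguments: {exc}") from exc
    return call["name"], arguments


# Hand-written stand-in for an assistant message; not real model output.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "record_thread_summary",
        "arguments": '{"title": "API changes", "key_points": ["pricing", "protest"]}',
    },
}
name, args = parse_function_call(sample_message)
```

The decoded `args` dictionary can then be passed to whatever local function the schema describes.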

Significant cost reductions have also been announced. Thanks to increasing system efficiencies, the cost of the popular text-embedding-ada-002 embedding model is reduced by 75%. gpt-3.5-turbo, the most popular chat model, also sees a 25% cost reduction for its input tokens.

Reddit GPT Summarizer has been updated to support the new models.

Introduction

In the sprawling, constantly evolving landscape of digital discussion platforms, Reddit is a vast repository of thoughts, debates, and narratives. Its threads can consist of hundreds, if not thousands, of comments, presenting a daunting challenge for those seeking to extract the most salient points or overarching themes. To tackle this issue, I developed an application called the Reddit GPT Summarizer, designed to parse through the complexity and deliver coherent, concise summaries of Reddit threads. However, the dynamic nature of technology and AI necessitates continuous innovation.

The key enhancements revolve around the integration of advanced language models from two pioneering companies in the field of artificial intelligence: OpenAI and Anthropic. Initially, the Reddit Summarizer was powered by ChatGPT alone. While competent with shorter threads, ChatGPT’s limited context window (8,192 tokens for GPT-4) posed challenges for effectively summarizing longer discussions. OpenAI has just released a new GPT-3.5 16k model. GPT-4 32k (32,768 tokens) is coming soon and is already available to select users and through Azure business accounts.

Anthropic’s new Claude model boasts a staggering 100,000 token context window (the largest window available today), a feature that has the potential to revolutionize the Reddit Summarizer’s performance. These enhancements allow the application to delve deeper into extensive threads, understand relationships and themes across a larger textual canvas, and generate comprehensive summaries.

The Reddit Summarizer is now equipped to handle a wider spectrum of Reddit content, producing summaries that are noticeably more coherent and informative. I look forward to the continued experimentation with these language models and the potential enhancements they can bring.

This article will take you through the evolution of the Reddit Summarizer, shedding light on the capabilities of the language models employed, their impact on the application’s performance, and the broader landscape of AI development. We will explore the competitive dynamics between the models, each with its unique offerings and strengths, and how leveraging a blend of these models can lead to powerful applications. We’ll also touch upon recent Reddit API pricing controversies. Let’s dive in!

Reddit GPT Summarizer

Reddit GPT Summarizer is an application written in Python (3.9+). It uses Streamlit (an open-source Python library that makes it easy to create web apps for machine learning and data science) and PRAW, the Python Reddit API wrapper. It has connectors for OpenAI and Anthropic completions.

The summarizer is designed to be simple and easy to learn. It doesn’t use helper libraries like LangChain or LlamaIndex, so it’s relatively lightweight. The interface controls a multi-pass summarization algorithm that processes the thread in chunks and feeds the result of each pass into the next. It should be easy to fork and adapt to other LLM applications.

Updates

Reddit Summarizer has been updated to leverage Anthropic’s Claude model, which now offers a 100k context window. This lives alongside GPT-4 as well as OpenAI’s older GPT-3 instruct models like text-davinci-002 and text-davinci-003. Support has also been added for the new gpt-3.5-turbo-16k model. The updated summarizer can now handle much longer Reddit threads and generate more comprehensive summaries by using larger context windows.

The original summarizer used the ChatGPT API to generate summaries of Reddit threads. While ChatGPT works well for short to mid-length threads, its limited context window of around 8k tokens makes it difficult to summarize long threads accurately. The older GPT-3 instruct models are provided to compare performance. Some work terribly (Ada, Babbage, Curie), but with multi-pass summarization, text-davinci-003 remarkably often outperforms ChatGPT. Give it a try!

Here is a video overview of the new updates:

Here is a look at the updated interface:

You can see there are now options to select the model type. In addition to the existing ChatGPT models, you can now use the older instruct models, the new 16k model, and all of the Claude models. Each model has an associated preset in the config, which sets the default chunk length, number of summaries, and max token length. You can find the new model presets in reddit-gpt-summarizer/app/config.py.

Note: these should be updated as necessary. Older models are often deprecated.
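To give a feel for what such a preset might look like, here is a small sketch. It is not a copy of config.py: the `ModelPreset` class, its field names, and every number in it are illustrative assumptions chosen only so that a chunk plus its summary fits inside each model's window.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPreset:
    vendor: str
    context_window: int       # total tokens the model accepts
    chunk_token_length: int   # tokens of thread text sent per request
    number_of_summaries: int  # how many passes to run by default
    max_token_length: int     # tokens reserved for each generated summary


# Illustrative values only; check the real presets in app/config.py.
MODEL_PRESETS = {
    "gpt-3.5-turbo": ModelPreset("openai", 4096, 2000, 3, 1000),
    "gpt-3.5-turbo-16k": ModelPreset("openai", 16384, 10000, 2, 2000),
    "gpt-4": ModelPreset("openai", 8192, 5000, 3, 1500),
    "claude-v1-100k": ModelPreset("anthropic", 100000, 50000, 2, 3000),
}


def get_preset(model: str) -> ModelPreset:
    """Look up the preset for a model, failing loudly on unknown names."""
    try:
        return MODEL_PRESETS[model]
    except KeyError:
        raise ValueError(f"no preset configured for model {model!r}") from None


def fits_context(preset: ModelPreset) -> bool:
    """A chunk and the summary generated from it must share the window."""
    return preset.chunk_token_length + preset.max_token_length <= preset.context_window
```

Keeping the defaults in one table like this means that deprecating a model is a one-line change.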

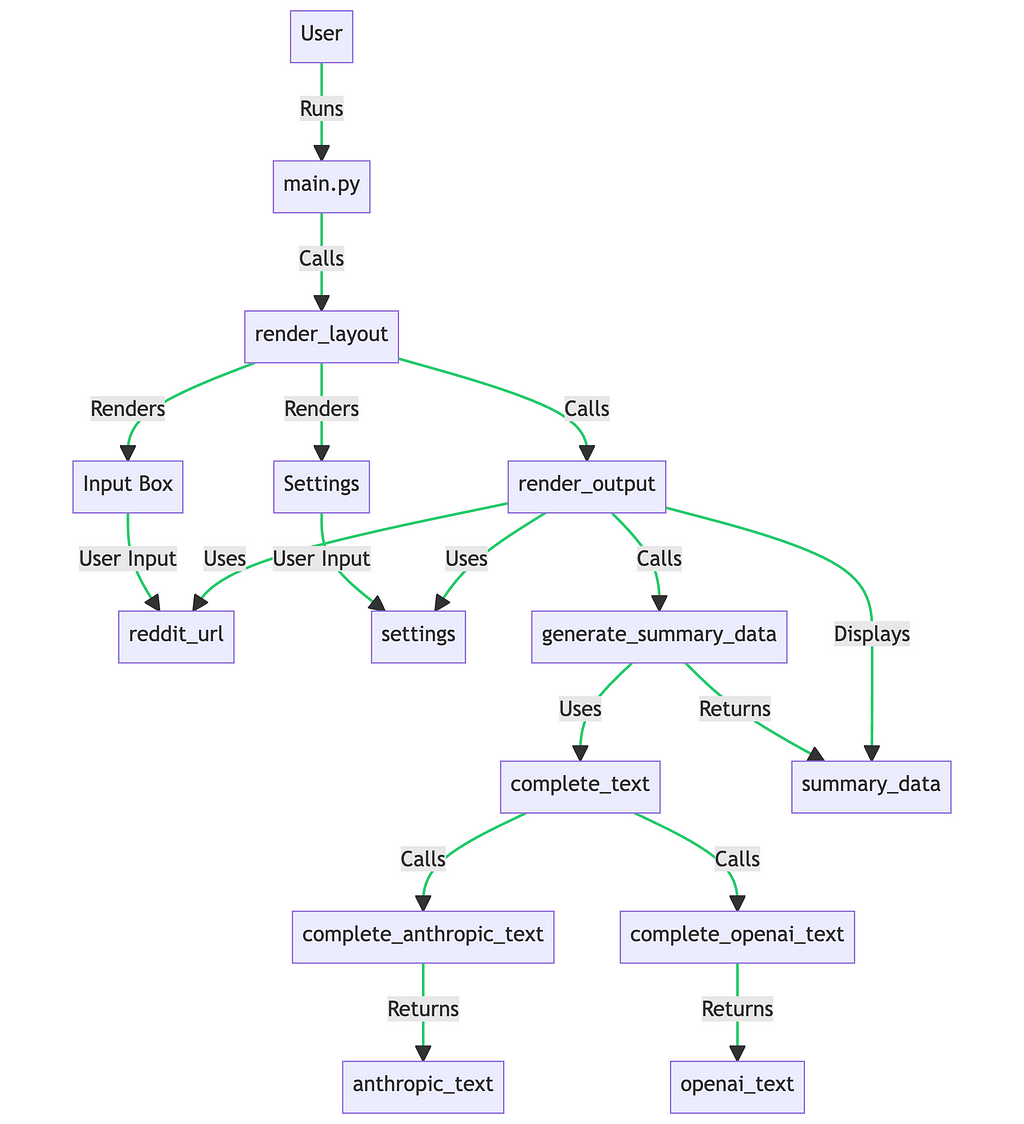

Here is a quick overview of the process flow:

June 13th, 2023: Updated table of models and their respective window size/rough number of words. Note: How tokens are counted can vary significantly depending on the language model and the vendor. The specifics of how each vendor counts their tokens could be subject to proprietary considerations, and the exact methodologies may not always be publicly available.
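A rough way to relate window sizes to word counts is the ratio of 0.75 words per token implied by the figure of 100,000 tokens to about 75,000 words quoted for Claude in this article. The sketch below applies that single ratio to the window sizes mentioned in the text. Treat the output as an estimate only, since each vendor tokenizes differently, and note that the dictionary keys are just labels for this example.

```python
# Assumption: one ratio for every model, taken from the article's
# "100,000 tokens or about 75,000 words" figure.
WORDS_PER_TOKEN = 0.75

# Context window sizes, in tokens, for the models discussed in the article.
CONTEXT_WINDOWS = {
    "gpt-3.5-turbo": 4096,
    "gpt-4": 8192,
    "gpt-3.5-turbo-16k": 16384,
    "gpt-4-32k": 32768,
    "claude-v1-100k": 100000,
}


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


for model, tokens in CONTEXT_WINDOWS.items():
    print(f"{model:<20}{tokens:>9,} tokens{approx_words(tokens):>9,} words")
```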

The larger context windows have significant benefits for the Reddit Summarizer. Threads with hundreds or even thousands of comments can now be summarized coherently, with the models incorporating information from across the entire thread. The summarizer can perform multi-pass summarization, where it summarizes a portion of the thread, then feeds that summary back into the model along with more of the thread to generate another high-level summary.

This recursive process results in a summary capturing the essence of extremely long and complex threads. Larger context windows require fewer passes. The Claude 100k model can consume most Reddit threads in a single pass, but you can sometimes get better results from multiple passes.
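The multi-pass idea can be sketched in a few lines. This is a simplified illustration rather than the summarizer's actual code: `summarize` and `count_tokens` are placeholders you would supply (a model call and a tokenizer), and the function names and prompt wording are my own assumptions.

```python
from typing import Callable, List


def chunk_comments(
    comments: List[str], max_tokens: int, count_tokens: Callable[[str], int]
) -> List[str]:
    """Greedily pack comments, in order, into chunks of at most max_tokens.

    A single comment longer than max_tokens still becomes its own chunk;
    truncating it is left to the caller.
    """
    chunks: List[str] = []
    current: List[str] = []
    current_tokens = 0
    for comment in comments:
        tokens = count_tokens(comment)
        if current and current_tokens + tokens > max_tokens:
            chunks.append("\n".join(current))
            current, current_tokens = [], 0
        current.append(comment)
        current_tokens += tokens
    if current:
        chunks.append("\n".join(current))
    return chunks


def multi_pass_summary(
    comments: List[str],
    max_tokens: int,
    summarize: Callable[[str], str],
    count_tokens: Callable[[str], int],
) -> str:
    """Summarize chunk by chunk, feeding each summary into the next pass."""
    summary = ""
    for chunk in chunk_comments(comments, max_tokens, count_tokens):
        if summary:
            prompt = f"Summary so far:\n{summary}\n\nMore comments:\n{chunk}"
        else:
            prompt = chunk
        summary = summarize(prompt)
    return summary
```

With a larger `max_tokens` the loop runs fewer times, which is why a 100k window can often finish in a single pass.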

API Pricing Controversy

The recent changes to Reddit’s API policies and pricing have sparked widespread controversy and concern, notably among developers of third-party apps and large language models (LLMs). With the new policies, Reddit plans to introduce a pricing model for its API, potentially charging as much as $12,000 per 50 million requests. As a result, several beloved Reddit browsing apps like Apollo, rif is fun for Reddit, ReddPlanet, and Sync have announced they will shut down due to the increased operating costs.

Reddit experienced temporary disruptions on June 13th-14th, 2023, as thousands of volunteer moderators protested new policies set by the company that runs the site. The protesters argue the policies will make moderating subreddits, the distinct communities found on Reddit, significantly more difficult, especially for moderators who rely on third-party apps and tools.

The implications for AI technologies that rely on Reddit’s data, like LLM-based Reddit summarizers, could be significant. Reddit’s API has traditionally been a rich data source for training AI models. However, under the new terms, commercial usage, such as training LLMs, will require a separate agreement with Reddit, the cost of which remains undisclosed. This could potentially drive up the costs of operating these models, affecting the availability or pricing of services that rely on them.

Reddit GPT Summarizer is designed for personal usage and experimentation and should be minimally impacted.

Reddit’s move to monetize its API is a response to the growing recognition of the value of its user-generated content. As more AI platforms emerge, Reddit aims to capitalize on the value of its data corpus, which is used to train AI models. Reddit CEO Steve Huffman has argued that the platform’s corpus of data is highly valuable and that Reddit doesn’t need to give all of that value to some of the largest companies in the world for free.

Nevertheless, the changes have sparked backlash from the Reddit community, leading to major communities planning to “go dark” in protest. Furthermore, developers have expressed concerns about the new policies’ lack of clarity, as Reddit has not provided precise details about how the changes will affect third-party apps or how API usage limits will be enforced.

If the costs associated with accessing Reddit’s API increase substantially, those costs may be passed on to users, making the services less accessible. On the other hand, Reddit plans to keep the API free for some use cases, such as those who build moderation tools or use Reddit in educational and research environments. Therefore, the specific implications for how you use Reddit summarizer will likely depend on the terms of any agreements you enter into with Reddit.

Anthropic

Anthropic is an AI startup based in San Francisco, founded by former senior members of OpenAI. It has developed an AI language model called Claude. Claude is similar to GPT-4 but has a larger context window. While GPT-4, when used as part of ChatGPT, has a context window of 8,192 tokens (or 32,768 tokens for the 32k model, which is not widely available), Claude has a context window of 100,000 tokens or about 75,000 words. This means Claude can analyze an entire book’s worth of material in under a minute, which is a significant capability upgrade. This larger context window could potentially help businesses extract important information from multiple documents through conversational interaction.

It’s worth mentioning that while Anthropic may not be as well-known as Microsoft or Google, it has emerged as a significant rival to OpenAI in terms of competitive offerings in large language models (LLMs) and API access. The company received a $300 million investment from Google in 2022, with Google acquiring a 10% stake in the firm. Anthropic uses self-supervision techniques to align language models toward being helpful, harmless, and honest.

OpenAI and Anthropic offer different capabilities and areas of focus. Anthropic has specialized in building language models with large context windows that are robustly aligned to be helpful, harmless, and honest. OpenAI has focused more on raw performance and pushed the capabilities of language models on benchmarks and for powering applications.

Anthropic offers two versions of Claude: “Claude” and “Claude Instant.” These models are available for early access groups and commercial partners. They can be used either through a chat interface in Anthropic’s developer console or via an API, which allows developers to integrate Claude’s functionalities into their apps.

The Claude model was developed with a focus on AI safety and is trained using a technique called “Constitutional AI.” According to Anthropic, Claude is more steerable, easier to converse with, and less likely to produce harmful outputs than other AI chatbots while maintaining high reliability and predictability.

Claude has already been integrated into several products available through partners, such as DuckAssist instant summaries from DuckDuckGo, a portion of Notion AI, and an AI chat app called Poe that was created by Quora.

Claude is priced per million tokens of prompt input and completion output. Claude Instant is currently available with pay-as-you-go pricing at $1.63 per million tokens of prompt input and $5.51 per million tokens of completion output. “Claude-v1,” the larger model, is priced at $11.02 per million tokens of input and $32.68 per million tokens of output.
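Assuming the prices above are read as dollars per million tokens, a quick back-of-the-envelope estimate of what one summarization request costs looks like this. The dictionary keys are just labels for the two models, and `estimate_cost` is a hypothetical helper, not part of any SDK.

```python
# (prompt price, completion price) in USD per million tokens,
# taken from the figures quoted above.
PRICES_PER_MILLION_TOKENS = {
    "claude-instant": (1.63, 5.51),
    "claude-v1": (11.02, 32.68),
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Return the estimated cost in USD of a single request."""
    prompt_price, completion_price = PRICES_PER_MILLION_TOKENS[model]
    total = prompt_tokens * prompt_price + completion_tokens * completion_price
    return total / 1_000_000


# A full 100k-token prompt with a 1,000-token summary:
full_window_v1 = estimate_cost("claude-v1", 100_000, 1_000)            # about $1.13
full_window_instant = estimate_cost("claude-instant", 100_000, 1_000)  # about $0.17
```

At these rates, filling the whole window on the larger model costs roughly a dollar per pass, which is worth keeping in mind when running several passes.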

Anthropic provides a Python SDK for accessing their API. The connector implementation in the summarizer is quite simple.
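The sketch below is not the summarizer's actual connector. It only illustrates the prompt layout that Claude's completion-style API expects, with alternating Human and Assistant turns, and it takes the SDK call as an injected `complete` callable so that it runs without an API key. The function names are hypothetical, and the two turn markers are defined locally with the same values the SDK exposes as constants.

```python
from typing import Callable

# Turn markers used by Claude's completion-style prompts.
HUMAN_PROMPT = "\n\nHuman:"
AI_PROMPT = "\n\nAssistant:"


def build_claude_prompt(instruction: str, text: str) -> str:
    """Wrap an instruction and the thread text in a single Human turn."""
    return f"{HUMAN_PROMPT} {instruction}\n\n{text}{AI_PROMPT}"


def complete_anthropic(
    instruction: str, text: str, complete: Callable[[str], str]
) -> str:
    """Build the prompt, delegate to the injected client call, tidy the result.

    `complete` is an assumption of this sketch: any function that sends a
    prompt string to the model and returns the completion text.
    """
    prompt = build_claude_prompt(instruction, text)
    return complete(prompt).strip()
```

In a real connector, `complete` would wrap the SDK's completion call together with the model name and the maximum number of tokens to sample.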

One important change is how the number of tokens is counted. We have to check for the vendor in the num_tokens_from_string function, which is not a big change.
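Here is a hedged sketch of what a vendor-aware counter could look like. Only the function name comes from the summarizer; the signature, the registry, and the word-based fallback are my assumptions for illustration. In practice you would register each vendor's own tokenizer (for OpenAI models, typically one built on tiktoken) instead of relying on the fallback.

```python
from typing import Callable, Dict

SUPPORTED_VENDORS = ("openai", "anthropic")

# Vendor name -> exact token counter. Empty here so the sketch has no
# third-party dependencies; real tokenizers would be registered at startup.
TOKEN_COUNTERS: Dict[str, Callable[[str], int]] = {}


def estimate_tokens(text: str) -> int:
    """Rough fallback based on about 0.75 words per token."""
    return round(len(text.split()) / 0.75)


def register_counter(vendor: str, counter: Callable[[str], int]) -> None:
    """Attach a vendor-specific tokenizer."""
    if vendor not in SUPPORTED_VENDORS:
        raise ValueError(f"unsupported vendor: {vendor!r}")
    TOKEN_COUNTERS[vendor] = counter


def num_tokens_from_string(text: str, vendor: str) -> int:
    """Count tokens with the vendor's tokenizer, or estimate if none is set."""
    if vendor not in SUPPORTED_VENDORS:
        raise ValueError(f"unsupported vendor: {vendor!r}")
    counter = TOKEN_COUNTERS.get(vendor, estimate_tokens)
    return counter(text)
```

Routing every count through one function keeps the chunking logic identical across vendors, even though the counts themselves differ.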

Results

Please see the addendum at the end for a sampling of outputs from each of the referenced models:

- The new 16k ChatGPT 3.5 can consume 4x more context, which is very useful. Most folks don’t have access to GPT-4 32k, so this is the largest accessible model from OpenAI. The new pricing is very competitive.

- Claude V1 Instant sometimes misunderstood the directive, instead choosing to review the article rather than summarize it.

- Using very large chunk sizes on the Anthropic 100k models (e.g., above 50k) resulted in subjectively worse summaries with weird formatting and artifacts, but two passes at 50k each are coherent. I don’t know if this is because the large context puts the model under pressure, but smaller chunk sizes with multiple passes still worked best.

- Using 100k, you are able to get effective summaries in a single pass, which I’ve found useful for summarizing information very quickly.

- Great results with text-davinci-003, an instruct model from the same GPT-3.5 family as ChatGPT that has not been fine-tuned for chat. Just goes to show that an instruct model can still be useful. It may be possible to get similar results with GPT-4 by tweaking the instruction and system role. text-davinci-003 gave the most comprehensive/longest results.

- ChatGPT 3.5 turbo and GPT-4 8k prefer concise results, but the newer 0613 variants are more comprehensive.

- Anthropic models were less concise but comparable in quality (most of the time).

- Anthropic models seemed to generate more variation between passes, whereas the OpenAI ChatGPT results were more consistent.

- GPT-4 seemed to be the best at following the instructions exactly. It consistently used markdown and provided links with references in the comments. GPT-4 did a good job of quoting users, whereas smaller models wrote higher-level summaries.

- All of the models compared had their strengths and weaknesses; it is still best to sample many times from different models. That being said, if you’re looking to get a summary of a large thread quickly, the 100k models by Anthropic perform well.

- GPT-4 32k is really expensive.

The Future

There have been many recent advancements that should enable ever-larger context windows. Magic Labs announced a prototype of a neural network architecture called LTM-1, which it claims supports an incredible 5 million tokens. Larger context windows are on OpenAI’s roadmap.

Meta AI published a paper on a new framework called MEGABYTE. It allows 1 million bytes of data to be modeled without tokenization, which has been described as text documents containing 750,000 words, a 3,025% increase over GPT-4. It has been called “promising” by OpenAI founding member Andrej Karpathy.

Conclusion

While the additional capabilities do come at the cost of higher pricing for some models, I’ve found the performance gains well worth it. The Reddit Summarizer summaries are coherent and informative. I’m excited to continue experimenting with these powerful new language models and see what other enhancements I can build into the Reddit Summarizer.

Pricing changes at Reddit affect the ability to use these summarization tools at scale in commercial applications, which now requires a separate agreement with Reddit.

I found benefits to leveraging models from both companies. ChatGPT provides a good, cheap general summarization ability (especially with 16k), while Claude’s large context window uniquely suits very long Reddit threads. The GPT-3 instruct models also offered another option for certain prompts. Using a combination of models from different vendors allowed me to play to each model’s strengths for various parts of the summarization process. Claude-V1 had comparable performance to ChatGPT when consuming a large number of tokens. It was hard to notice major differences in reasoning, but the larger context size improved the performance, and it’s surprisingly fast. The instant variant is even faster.

As for which AI language model is superior, it depends on the specific use case. Claude’s larger context window might be more advantageous for tasks that require understanding and generating text based on a large amount of context. On the other hand, GPT-4 with multi-pass demonstrated higher performance in summarization, and GPT-4’s future ability to process images alongside text could be more beneficial in scenarios that require multimodal understanding.

Thank you for reading, and good luck with your projects!

Helpful Links

- Reddit GPT Summarizer

- Building a Reddit Thread Summarizer with ChatGPT API

- OpenAI

- OpenAI Blog: Function Calling and Other Updates

- Anthropic

- Claude

- Introducing 100k Context Windows

- Reddit Data API terms

- Apollo

- rif is fun for Reddit

- ReddPlanet

- Sync

- PRAW: the Python Reddit API wrapper

- Streamlit

- LlamaIndex

- LangChain

- Magic Labs LTM-1

- Megabyte Paper by Meta

- Meta AI

- Reddit protest updates: all the news about the API changes infuriating Redditors

- Reddit’s upcoming API changes will make AI companies pony up

Addendum

Sample outputs for qualitative comparison. I thought it would be fun to sample r/OutOfTheLoop about the recent Reddit blackout:

Chat GPT 3.5 16k, third pass

Chat GPT 3.5, third pass

Claude v1 Instant 100k, first pass

Claude V1 Instant, third pass

Claude v1 100k, first pass

Claude v1, third pass

Chat GPT 4 8k 0613, third pass

Chat GPT 4 8k, third pass

GPT text-davinci-003, third pass

Transforming Reddit Summarization With Claude 100k and GPT 16k was originally published in Better Programming on Medium, where people are continuing the conversation by highlighting and responding to this story.