My Journey To Manage Tens of Thousands of Resources Using Boto in Multiple Accounts and Regions in AWS

How I did all this while working within a 15-minute runtime limit

Why?

My job is to manage automation for heavy users of AWS services across multiple accounts and regions. When all the resources I managed had to be gathered and managed from a single account and a single automation, I got overwhelmed.

God, why is it so complicated? Why use SQS? Why deploy automation into every account? Is there a simpler solution?

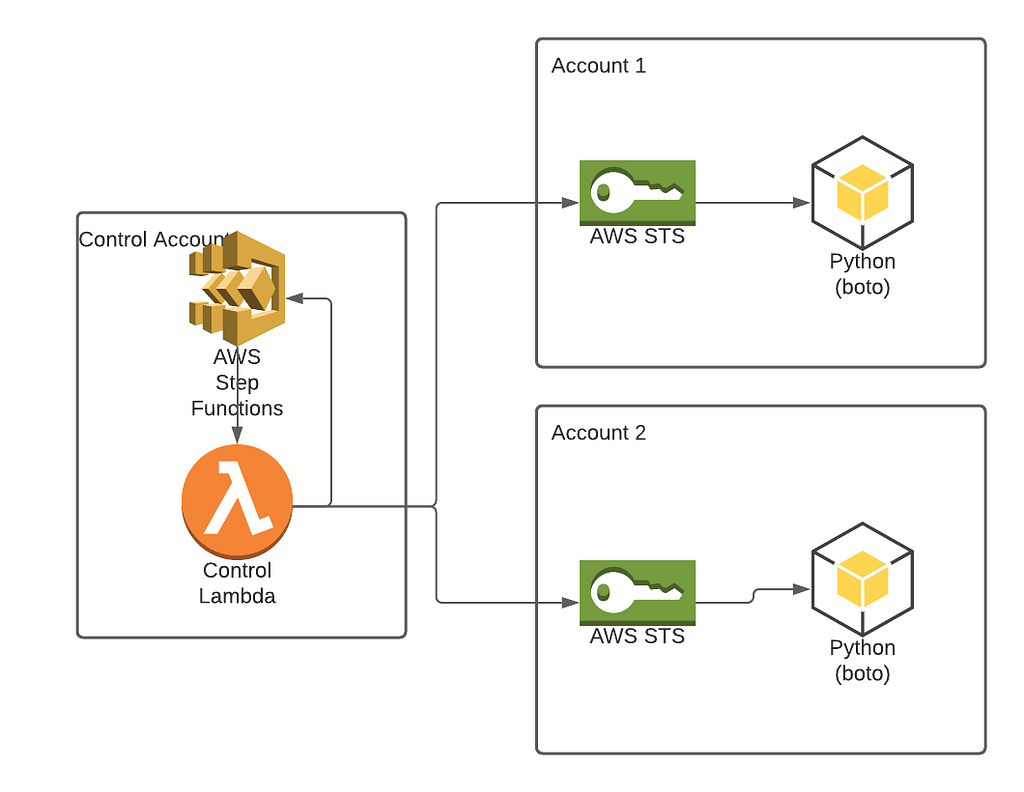

Later, I found a better approach than deploying the Lambda function into every account and setting up IAM permissions in every account to access the MQ role. Yup, it’s better to deploy a role that can be assumed through STS into each target account and work with the target accounts directly, but I am getting a little ahead of myself.

Limits

A single Lambda execution has a 15-minute runtime limit. You can configure a shorter timeout, but not a longer one. If you need to work with more data, typically across multiple AWS accounts and regions, and perform an action that may involve, for example, 10,000 EC2 instances, you will almost certainly run over that limit.

Also, even if the limit were longer, debugging such a long-running function when something goes wrong would be extremely difficult. For that reason, decoupling is needed.
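One way to stay inside the limit is for the handler to watch its own clock and stop early, handing back the work it did not reach. A minimal sketch, assuming the work arrives as a list under a hypothetical `items` key; `process_item` and the 60-second margin are placeholders, while `get_remaining_time_in_millis()` is the method the Lambda Python runtime exposes on its context object.

```python
from typing import Any, Dict

SAFETY_MARGIN_MS = 60_000  # hypothetical: stop when less than a minute remains


def process_item(item: Any) -> None:
    """Hypothetical per-item work, e.g. tagging one instance."""


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    items = list(event.get("items", []))
    done = 0
    for item in items:
        # The Lambda context reports the milliseconds left before the function times out
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        process_item(item)
        done += 1
    # Hand back whatever was not processed so a next invocation can resume
    event["items"] = items[done:]
    event["loop"] = bool(event["items"])
    return event
```

A generous margin matters because returning the event and logging also take time after the last item.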

Decoupling

Let’s imagine you have a task: create an automation that adds a tag with the key “Production” and value “Yes” to every instance, with 1,000 instances in each region (16 regions at the time of writing) across three accounts.

Assume that gathering information about each instance takes one second and adding a tag to each instance takes another second.

So, we have 1,000 instances × 16 regions × 3 accounts × 2 seconds = 96,000 seconds = 1,600 minutes = 26 hours and 40 minutes.

Twenty-six hours and 40 minutes is far beyond the 15-minute limit.
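The same estimate as a quick script, which also shows the minimum number of back-to-back 15-minute invocations the job would need, ignoring parallelism and any overhead:

```python
import math

LAMBDA_LIMIT_SECONDS = 15 * 60  # maximum Lambda timeout

instances_per_region = 1_000
regions = 16
accounts = 3
seconds_per_instance = 1 + 1  # one second to gather data, one second to tag

total_seconds = instances_per_region * regions * accounts * seconds_per_instance
hours, remainder = divmod(total_seconds, 3600)
minutes = remainder // 60

# Lower bound: every invocation runs for the full 15 minutes
min_invocations = math.ceil(total_seconds / LAMBDA_LIMIT_SECONDS)

print(f"{total_seconds:,} s = {hours} h {minutes} min")  # 96,000 s = 26 h 40 min
print(f"at least {min_invocations} full-length invocations")  # at least 107 full-length invocations
```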

Furthermore, you cannot rely on a message queue alone, because you first need to generate the list of machines to process, and even generating that list takes well over the 15-minute limit.

So, we need to decouple our functions first so that we can work around these limits.

Step functions

Boto3 — NextToken

When you need to list 100,000 EC2 instances, you will run into the Lambda runtime limit. Because an API call can match a huge number of results, AWS caps how many items a single response returns (the exact limit varies by API) and paginates the rest: each response includes a NextToken that you pass to the next call to get the following page. The standard loop looks like this:

```python
import boto3

ec2 = boto3.client('ec2')

page_size = 10

response = ec2.describe_instances(MaxResults=page_size)

i = 0

while True:
    i = i + 1  # number of pages fetched so far

    # process response here

    if 'NextToken' not in response:
        break  # last page reached

    response = ec2.describe_instances(MaxResults=page_size,
                                      NextToken=response['NextToken'])
```

Sample code 1: The standard way to page through items, without decoupling
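The NextToken loop can be factored into a small generator that works with any call following this convention. A minimal sketch; `iterate_pages` is a hypothetical helper, and boto3 also ships built-in paginators (`ec2.get_paginator('describe_instances').paginate(PaginationConfig={'PageSize': 10})`) that do the same job for supported operations.

```python
from typing import Any, Callable, Dict, Iterator


def iterate_pages(call: Callable[..., Dict[str, Any]], **kwargs: Any) -> Iterator[Dict[str, Any]]:
    """Yield every page of a NextToken-paginated API call."""
    while True:
        response = call(**kwargs)
        yield response
        token = response.get("NextToken")
        if not token:
            return  # last page reached
        # Repeat the same call, now starting from the returned token
        kwargs = {**kwargs, "NextToken": token}
```

With a real client this would be used as `for page in iterate_pages(ec2.describe_instances, MaxResults=10): ...`. Note that it still runs in a single invocation, so on its own it does not solve the 15-minute problem.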

The best way to work around the 15-minute runtime limit is to drive the loop from an AWS Step Functions state machine, whose Standard workflows can run for up to one year. Step Functions passes the event from one Lambda invocation to the next, so it makes sense to store the last NextToken there.

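```python
import boto3


def lambda_handler(event, context):
    # The region comes from the step function input (falls back to the default region)
    client = boto3.client('ec2', region_name=event.get("region"))

    kwargs = {"MaxResults": 10}
    if event.get("NextToken"):
        # Continue from where the previous invocation stopped
        kwargs["NextToken"] = event["NextToken"]

    response = client.describe_instances(**kwargs)

    # process response here

    # Hand the token back to the step function; None means there are no more pages
    event["NextToken"] = response.get("NextToken")
    event["loop"] = event["NextToken"] is not None
    return event
```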

Sample code 2: This is the worker lambda, which is called by the step function

The step function calls the worker Lambda, which processes just ten items (use whatever number can safely be processed within the 15-minute limit) and returns NextToken to the step function, which then loops again.
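The FetchData/Looper cycle can be tried locally before deploying anything. In this simulation, a fake paginated source stands in for `describe_instances` and a plain `while` loop plays the Choice state; `FAKE_PAGES`, `fake_describe_instances`, `worker`, and `run_state_machine` are illustrative names, not part of any AWS API.

```python
from typing import Any, Dict, List, Optional

# Illustrative stand-in for a paginated API: token -> page
FAKE_PAGES: Dict[Optional[str], Dict[str, Any]] = {
    None: {"Items": ["i-1", "i-2"], "NextToken": "t1"},
    "t1": {"Items": ["i-3", "i-4"], "NextToken": "t2"},
    "t2": {"Items": ["i-5"]},
}


def fake_describe_instances(MaxResults: int, NextToken: Optional[str] = None) -> Dict[str, Any]:
    return FAKE_PAGES[NextToken]


def worker(event: Dict[str, Any], processed: List[str]) -> Dict[str, Any]:
    """Same shape as the worker Lambda: one page per invocation."""
    kwargs: Dict[str, Any] = {"MaxResults": 2}
    if event.get("NextToken"):
        kwargs["NextToken"] = event["NextToken"]
    response = fake_describe_instances(**kwargs)
    processed.extend(response["Items"])  # "process response here"
    event["NextToken"] = response.get("NextToken")
    event["loop"] = event["NextToken"] is not None
    return event


def run_state_machine(event: Dict[str, Any]) -> List[str]:
    """Plays FetchData -> Looper until Looper falls through to Success."""
    processed: List[str] = []
    event = worker(event, processed)      # FetchData
    while event["loop"]:                  # Looper (Choice state)
        event = worker(event, processed)  # back to FetchData
    return processed
```

Each call to `worker` corresponds to one short Lambda invocation, so no single invocation has to see all the pages.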

In the step function’s retry logic, there is a retrier for Lambda.TooManyRequestsException. It is there for when you run many step functions at once, e.g., one for each region and account, so that throttled Lambda invocations are retried with a backoff instead of failing the execution.

```json
{
  "Comment": "Instance fetcher",
  "StartAt": "FetchData",
  "States": {
    "FetchData": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:us-east-1:xxxx:function:instance-fetcher",
      "Next": "Looper",
      "Retry": [
        {
          "ErrorEquals": ["Lambda.TooManyRequestsException"],
          "IntervalSeconds": 10,
          "MaxAttempts": 6,
          "BackoffRate": 2
        }
      ]
    },
    "Looper": {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.loop",
          "BooleanEquals": true,
          "Next": "FetchData"
        }
      ],
      "Default": "Success"
    },
    "Success": {
      "Type": "Pass",
      "End": true
    }
  }
}
```

Sample code 3: Step function text definition
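With this retrier, Step Functions waits IntervalSeconds before the first retry and multiplies the wait by BackoffRate for each further retry, up to MaxAttempts retries. A small helper makes the schedule explicit; `retry_delays` is a hypothetical name, and it ignores jitter and MaxDelaySeconds, which the definition does not set.

```python
from typing import List


def retry_delays(interval_seconds: float, max_attempts: int, backoff_rate: float) -> List[float]:
    """Wait before each retry of a Step Functions retrier (no jitter, no MaxDelaySeconds)."""
    return [interval_seconds * backoff_rate ** attempt for attempt in range(max_attempts)]


delays = retry_delays(interval_seconds=10, max_attempts=6, backoff_rate=2)
print(delays)       # [10, 20, 40, 80, 160, 320]
print(sum(delays))  # 630
```

So a throttled FetchData state can keep retrying for about 10.5 minutes in total before the execution fails, which gives parallel executions time to drain.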

SQS

Multiple workers

When you process AWS resources with boto3, step functions alone might not be the best tool for the job. They have pitfalls when, for example, you need to process many resources in parallel.

This is especially true if you are writing data to a database and must throttle and queue the writes. For this reason, I recommend using the step function to gather data about resources and put it into an SQS queue, from which it is processed.

This works especially well with reserved concurrency in AWS Lambda. You can limit the number of Lambda functions running in parallel, so you won’t run into database deadlocks if you are using a database.
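When the step function pushes the gathered records onto the queue, grouping them into batches saves API calls, because SQS `SendMessageBatch` accepts up to 10 entries per call. A minimal sketch; `to_sqs_batches` and the record shape are hypothetical, and each returned list would be passed as `Entries` to `sqs.send_message_batch(QueueUrl=queue_url, Entries=entries)`, checking the response’s `Failed` list for entries to resend.

```python
import json
from typing import Any, Dict, Iterable, List

SQS_MAX_BATCH = 10  # SendMessageBatch accepts at most 10 entries per call


def to_sqs_batches(resources: Iterable[Dict[str, Any]]) -> List[List[Dict[str, str]]]:
    """Group resource records into SendMessageBatch-sized lists of entries."""
    batches: List[List[Dict[str, str]]] = []
    current: List[Dict[str, str]] = []
    for resource in resources:
        # Id only has to be unique within one batch
        current.append({"Id": str(len(current)), "MessageBody": json.dumps(resource)})
        if len(current) == SQS_MAX_BATCH:
            batches.append(current)
            current = []
    if current:
        batches.append(current)  # last, partially filled batch
    return batches
```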

Reserved concurrency

Lambda functions can have reserved concurrency. This setting sets aside part of the account’s concurrency for the function and caps the maximum number of instances of that function running simultaneously.

This is useful when accessing databases, both to prevent deadlocks during writes and to let you use cheaper, less performant databases.

You can set reserved concurrency on each Lambda function in its concurrency settings.
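Besides the console, the setting can be applied with boto3’s Lambda client via `put_function_concurrency(FunctionName=..., ReservedConcurrentExecutions=...)`. To illustrate the effect locally, here is a simulation, not the Lambda service: a `BoundedSemaphore` plays the role of reserved concurrency, so no more than `limit` simulated invocations run at once even when a burst of messages arrives. `run_with_cap` is a hypothetical name.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_with_cap(messages: List[int], limit: int) -> int:
    """Simulate many invocations with at most `limit` running; return the observed peak."""
    cap = threading.BoundedSemaphore(limit)
    lock = threading.Lock()
    running = 0
    peak = 0

    def handle(message: int) -> None:
        nonlocal running, peak
        with cap:  # an invocation only starts when a concurrency slot is free
            with lock:
                running += 1
                peak = max(peak, running)
            time.sleep(0.01)  # pretend to write `message` to the database
            with lock:
                running -= 1

    # Far more threads than the cap, like a burst of queued messages
    with ThreadPoolExecutor(max_workers=max(1, len(messages))) as pool:
        list(pool.map(handle, messages))
    return peak


print(run_with_cap(list(range(50)), limit=5))
```

With a real function, Lambda throttles the extra invocations instead of blocking them, and an SQS event source retries the affected messages later.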

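Multiple accounts

To work in another account, the Lambda function assumes a role in that account through AWS STS. The helper below builds a boto3 session whose assumed-role credentials are fetched on first use and refreshed automatically when they expire.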

```python
import os

import boto3
import botocore.credentials
import botocore.session

ASSUME_ROLE_ARN = os.environ.get('ASSUME_ROLE_ARN', None)


def assumed_role_session(role_arn: str, base_session: botocore.session.Session = None):
    base_session = base_session or boto3.session.Session()._session
    # Calls sts:AssumeRole using the base session's credentials
    fetcher = botocore.credentials.AssumeRoleCredentialFetcher(
        client_creator=base_session.create_client,
        source_credentials=base_session.get_credentials(),
        role_arn=role_arn,
        extra_args={}
    )
    # Fetched on first use and refreshed automatically before they expire
    creds = botocore.credentials.DeferredRefreshableCredentials(
        method='assume-role',
        refresh_using=fetcher.fetch_credentials
    )
    botocore_session = botocore.session.Session()
    botocore_session._credentials = creds
    return boto3.Session(botocore_session=botocore_session)


# aws sessions
mysession = botocore.session.Session()
assumed_role = assumed_role_session(ASSUME_ROLE_ARN, mysession)

# aws clients
assumed_ec2 = assumed_role.client('ec2', region_name='us-east-1')
response = assumed_ec2.describe_instances()
```
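To loop over several accounts, the worker only needs the target role’s ARN for each account. A minimal sketch, assuming every target account deploys the role under the name `TargetRole` with path `/`, as in the Target role template; `role_arn_for` is a hypothetical helper, and each ARN it returns would be passed to `assumed_role_session`.

```python
import re

ACCOUNT_ID = re.compile(r"^\d{12}$")  # AWS account IDs are 12 digits


def role_arn_for(account_id: str, role_name: str = "TargetRole") -> str:
    """Build the IAM role ARN to assume in `account_id` (role path assumed to be /)."""
    account_id = account_id.strip()
    if not ACCOUNT_ID.match(account_id):
        raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    return f"arn:aws:iam::{account_id}:role/{role_name}"


arns = [role_arn_for(a) for a in "111111111111, 222222222222".split(",")]
print(arns)
# ['arn:aws:iam::111111111111:role/TargetRole', 'arn:aws:iam::222222222222:role/TargetRole']
```

Validating the ID up front turns a typo in the account list into a clear error instead of an AccessDenied from STS.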

Target role

```yaml
Resources:
  TargetRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
              AWS:
                - '123456789012' # placeholder: source AWS account ID (where the Lambda runs)
            Action:
              - 'sts:AssumeRole'
      Path: /
      RoleName: TargetRole
  TargetPolicy:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: TargetPolicy
      Roles:
        - !Ref TargetRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - ec2:DescribeInstances
            Resource: "*"
```

Source role

```yaml
Resources:
  SourceRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Path: /
      RoleName: SourceRole
  SourcePolicy:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: SourcePolicy
      Roles:
        - !Ref SourceRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - ec2:DescribeInstances
            Resource: "*"
          # Lets the Lambda assume TargetRole in the other accounts
          - Effect: Allow
            Action:
              - 'sts:AssumeRole'
            Resource: 'arn:aws:iam::*:role/TargetRole'
```

Multiple regions

Working in multiple regions is the easiest part. You can start one step function execution per region (and per account), passing the region as an input parameter. The step function passes that input on to each Lambda invocation, where you can use the region to create your boto3 client.

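```python
import json
import os
import uuid

import boto3

myboto = boto3.client('stepfunctions', region_name='us-east-1')
ec2 = boto3.client('ec2', region_name='us-east-1')

# Comma-separated account IDs (ACCOUNTS is a hypothetical environment variable name)
accounts = os.environ["ACCOUNTS"].split(",")

# All regions enabled for this account
regions = [region['RegionName'] for region in ec2.describe_regions()['Regions']]

for account in accounts:
    for region in regions:
        myid = str(uuid.uuid4())[:8]
        execution = myboto.start_execution(
            stateMachineArn="arn-of-sm",
            name=f"account-{account}-region-{region}-{myid}",
            input=json.dumps({"region": region, "account_id": account}),
        )
        print("Starting Step Function with Arn: %s" % execution["executionArn"])
```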
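Building the execution payloads separately from the `start_execution` calls makes the fan-out easy to test without AWS. A minimal sketch; `build_executions` is a hypothetical helper, and the only service rule it checks is that Step Functions execution names may be at most 80 characters.

```python
import json
import uuid
from typing import Dict, Iterable, List

MAX_EXECUTION_NAME = 80  # Step Functions execution names are limited to 80 characters


def build_executions(accounts: Iterable[str], regions: Iterable[str]) -> List[Dict[str, str]]:
    """One start_execution payload (name + JSON input) per account/region pair."""
    region_list = list(regions)  # reused for every account
    executions: List[Dict[str, str]] = []
    for account in accounts:
        for region in region_list:
            name = f"account-{account}-region-{region}-{uuid.uuid4().hex[:8]}"
            if len(name) > MAX_EXECUTION_NAME:
                raise ValueError(f"execution name too long: {name}")
            executions.append({
                "name": name,
                "input": json.dumps({"region": region, "account_id": account}),
            })
    return executions
```

Each payload can then be passed straight through as `myboto.start_execution(stateMachineArn="arn-of-sm", **payload)`.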

My Journey To Manage Tens of Thousands of Resources Using Boto in Multiple Accounts, Regions in AWS was originally published in Better Programming on Medium.