After successfully building a ReAct agent with Guidance, I continue with something more complicated: the generative agent. This study was published on arXiv by a team from Stanford and Google on April 7, 2023. The authors build a small village full of generative agents, each controlled by an LLM (e.g., ChatGPT). It is like “The Sims”, except that the NPCs behave like humans. By leveraging LLMs, the agents can think, make plans and decisions, and interact with one another.

There are several implementations of this study, but I could not find a good one that runs on local LLMs with all the features. Langchain also provides a notebook implementation. It seems to work fine with ChatOpenAI, but I cannot run it properly with my local Wizard-Vicuna model. The main problem is the same as in my previous post: the model sometimes doesn't follow the template. Moreover, the implementation still lacks some features: making plans, normalizing retrieval scores, and generating a full agent summary. Therefore, I decided to implement it myself.

Overview

My goal is to make an implementation that is as close to the paper as possible. Still, several parts could probably be improved. I hope my implementation is helpful to those who want to play with generative agents. The code is easy to plug into a virtual environment for experimenting.

The code can be found in my GitHub repository. I used Guidance to build the prompts, while the memory stream is based on Langchain's TimeWeightedVectorStoreRetriever. The code is based on Langchain's Generative Agent implementation. My agent has all the features of the original paper:

- Memory and Retrieval

- Reflection

- Planning (needs improvement)

- Reacting and re-planning

- Dialogue generation (needs improvement)

- Agent summary

- Interview

A generative agent thus has two main parts: the memory and the logical components (planning, reflection, reacting, etc.).

The memory receives information from the environment and stores it as a list. Each memory includes its content, its creation and last-access times, and an importance score. For example:

Document(page_content='Sam is a Ph.D student', metadata={'importance': 7, 'created_at': datetime.datetime(2023, 6, 1, 21, 37, 5, 793909), 'last_accessed_at': datetime.datetime(2023, 6, 1, 21, 40, 24, 610502), 'buffer_idx': 0})
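To make the structure of a memory concrete, here is a minimal, dependency-free sketch of a memory record and an append-only stream. The `MemoryRecord` and `MemoryStream` names are hypothetical and only mirror the metadata fields shown above (`importance`, `created_at`, `last_accessed_at`, `buffer_idx`); the actual implementation stores Langchain `Document` objects and also indexes them in a vector store.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class MemoryRecord:
    """Hypothetical stand-in for a Langchain Document and its metadata."""
    content: str
    importance: int
    created_at: datetime
    last_accessed_at: datetime
    buffer_idx: int


@dataclass
class MemoryStream:
    """Append-only list of memories; buffer_idx is each record's list position."""
    records: List[MemoryRecord] = field(default_factory=list)

    def add(self, content: str, importance: int,
            now: Optional[datetime] = None) -> MemoryRecord:
        if now is None:
            now = datetime.now()
        record = MemoryRecord(
            content=content,
            importance=importance,
            created_at=now,
            last_accessed_at=now,  # a new memory starts out as "just accessed"
            buffer_idx=len(self.records),
        )
        self.records.append(record)
        return record


stream = MemoryStream()
t0 = datetime(2023, 6, 1, 21, 37, 5)
stream.add("Sam is a Ph.D student", importance=7, now=t0)
stream.add("Sam has a dog, named Max", importance=4, now=t0)  # illustrative score
print([(r.buffer_idx, r.content) for r in stream.records])
```

Because `buffer_idx` is simply the list position, a retrieved document can always be mapped back to its entry in the stream, which is what the retrieval code relies on when it updates `last_accessed_at`.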

To build the memory stream, I implement TimeWeightedVectorStoreRetrieverModified, based on Langchain's VectorStoreRetriever.
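Recency in a time-weighted memory stream is an exponential decay over the hours since a memory was last accessed; Langchain's TimeWeightedVectorStoreRetriever uses the form `(1 - decay_rate) ** hours_passed`. The sketch below reproduces that formula on its own. The `recency_score` name and the 0.01 decay rate are assumptions for illustration, not values taken from my repository.

```python
from datetime import datetime, timedelta


def recency_score(last_accessed_at: datetime, now: datetime,
                  decay_rate: float = 0.01) -> float:
    """Exponential decay over the hours since the memory was last accessed."""
    hours_passed = (now - last_accessed_at).total_seconds() / 3600
    return (1.0 - decay_rate) ** hours_passed


now = datetime(2023, 6, 1, 12, 0)
print(recency_score(now, now))                        # 1.0: just accessed
print(recency_score(now - timedelta(hours=24), now))  # about 0.786
```

Since retrieval resets `last_accessed_at`, memories that keep getting retrieved stay "recent" even if they were created long ago.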

The logical components rely on information retrieved from the memory. Relevant memories are retrieved with the get_relevant_documents method:

import datetime
from typing import Any, List, Optional

import numpy as np
from langchain.schema import Document


def get_relevant_documents(self, query: str, current_time: Optional[Any] = None) -> List[Document]:
    """Return documents that are relevant to the query."""
    if current_time is None:
        current_time = datetime.datetime.now()
    # Start with the k most recent memories at the default salience
    docs_and_scores = {
        doc.metadata["buffer_idx"]: (doc, self.default_salience)
        for doc in self.memory_stream[-self.k :]
    }
    # If a doc is considered salient, update the salience score
    docs_and_scores.update(self.get_salient_docs(query))
    # Raw score list per candidate (columns 0 and 1 are recency and importance)
    rescored_docs = [
        (doc, self._get_combined_score_list(doc, relevance, current_time))
        for doc, relevance in docs_and_scores.values()
    ]
    score_array_np = np.array([scores for _, scores in rescored_docs])
    # Min-max normalize each score column to [0, 1]; a constant column
    # gets delta 1 so the division never hits zero
    col_min = score_array_np.min(axis=0)
    delta_np = score_array_np.max(axis=0) - col_min
    delta_np = np.where(delta_np == 0, 1, delta_np)
    x_norm = (score_array_np - col_min) / delta_np
    # Weight time and importance score less
    x_norm[:, 0] = x_norm[:, 0] * 0.15
    x_norm[:, 1] = x_norm[:, 1] * 0.15
    x_norm_sum = x_norm.sum(axis=1)
    rescored_docs = [
        (doc, score) for (doc, _), score in zip(rescored_docs, x_norm_sum)
    ]
    rescored_docs.sort(key=lambda x: x[1], reverse=True)
    result = []
    # Ensure frequently accessed memories aren't forgotten
    for doc, _ in rescored_docs[: self.k]:
        # TODO: Update vector store doc once `update` method is exposed.
        buffered_doc = self.memory_stream[doc.metadata["buffer_idx"]]
        buffered_doc.metadata["last_accessed_at"] = current_time
        result.append(buffered_doc)
    return result

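It first collects candidate memories: the k most recent ones, plus any that self.get_salient_docs(query) finds relevant to the query. Each candidate gets a list of scores (recency, importance, and relevance), every score column is min-max normalized to [0, 1] so the scores are comparable, and the documents are sorted by their weighted sum. In my implementation, recency and importance are weighted less than relevance (feel free to change it):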

# Weight time and importance score less
x_norm[:, 0] = x_norm[:, 0] * 0.15
x_norm[:, 1] = x_norm[:, 1] * 0.15
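To see what the normalization and weighting do, here is the same arithmetic pulled out of the method into plain NumPy and run on a made-up score matrix. The three-column layout [recency, importance, relevance] is an assumption consistent with the weighting comment above, and the values are invented.

```python
import numpy as np

# Rows are memories; columns are [recency, importance, relevance] (made-up values).
scores = np.array([
    [0.99, 7.0, 0.20],
    [0.50, 2.0, 0.90],
    [0.80, 5.0, 0.55],
])

# Min-max normalize each column to [0, 1]; a constant column gets delta 1.
lo = scores.min(axis=0)
delta = scores.max(axis=0) - lo
delta = np.where(delta == 0, 1, delta)
x_norm = (scores - lo) / delta

# Down-weight recency and importance relative to relevance.
weights = np.array([0.15, 0.15, 1.0])
combined = (x_norm * weights).sum(axis=1)

# Indices of memories from best to worst combined score.
ranking = np.argsort(-combined)
print(combined.round(3), ranking)
```

The most relevant memory (row 1) wins even though it is the least recent and least important. Because each column is rescaled first, the 0.15 weights act on comparable ranges, even though raw importance is on a 1 to 10 scale while recency is a fraction.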

Besides the memory, the logical components are Planning, Reflection, Summary, Reaction, Re-planning, and Interview. I tried to follow the paper's instructions when building each of them. For example, here is the agent summary:

def get_summary(self, force_refresh=False, now=None):
    current_time = self.get_current_time() if now is None else now
    # total_seconds() includes whole days; timedelta.seconds would drop them
    since_refresh = (current_time - self.last_refreshed).total_seconds()
    if (
        not self.summary
        or since_refresh >= self.summary_refresh_seconds
        or force_refresh
    ):
        # Answer the three Appendix A queries from retrieved memories
        core_characteristics = self._run_characteristics()
        daily_occupation = self._run_occupation()
        feeling = self._run_feeling()
        description = core_characteristics + '. ' + daily_occupation + '. ' + feeling
        self.summary = f"Name: {self.name} (age: {self.age})" + f"\nSummary: {description}"
        self.last_refreshed = current_time
    return self.summary
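A note on the refresh check: `timedelta.seconds` is only the seconds component of a difference and ignores whole days, so a gap of exactly one day reports 0, while `total_seconds()` gives the full elapsed time. The snippet below shows the difference; the 3600-second refresh interval is an assumed value for illustration.

```python
from datetime import datetime, timedelta

last_refreshed = datetime(2023, 6, 1, 7, 25)
current_time = last_refreshed + timedelta(days=1)
summary_refresh_seconds = 3600  # assumed refresh interval

print((current_time - last_refreshed).seconds)          # 0: days are dropped
print((current_time - last_refreshed).total_seconds())  # 86400.0

needs_refresh = (current_time - last_refreshed).total_seconds() >= summary_refresh_seconds
print(needs_refresh)  # True
```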

I follow Appendix A of the paper and ask three queries: “[name]’s core characteristics”, “[name]’s current daily occupation”, and “[name]’s feeling about his recent progress in life”. Each answer is generated from the retrieved memories, and the three answers are joined into the agent summary. Here is the prompt for core characteristics (using Guidance):

PROMPT_CHARACTERISTICS = """### Instruction:
{{statements}}
### Input:
How would one describe {{name}}'s core characteristics given the following statements?
### Response:
Based on the given statements, {{gen 'res' stop='\n'}}"""

def _run_characteristics(self):
    # Retrieve memories related to the agent's core characteristics
    docs = self.retriever.get_relevant_documents(self.name + "'s core characteristics", self.get_current_time())
    statements = get_text_from_docs(docs, include_time=False)
    # Fill the Guidance template and let the model complete 'res'
    prompt = self.guidance(PROMPT_CHARACTERISTICS, silent=self.silent)
    result = prompt(statements=statements, name=self.name)
    return result['res']
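Put together, the summary step retrieves memories for each of the three Appendix A queries, condenses each set with the LLM, and joins the answers. The sketch below shows only that control flow with plain callables; `summary_queries`, `build_summary`, and the two stub functions are hypothetical stand-ins for the retriever and the Guidance prompts, not functions from the repository.

```python
from typing import Callable, List


def summary_queries(name: str) -> List[str]:
    """The three Appendix A queries, filled in for one agent."""
    return [
        f"{name}'s core characteristics",
        f"{name}'s current daily occupation",
        f"{name}'s feeling about his recent progress in life",
    ]


def build_summary(name: str, age: int,
                  retrieve: Callable[[str], List[str]],
                  answer: Callable[[str, List[str]], str]) -> str:
    """Retrieve statements per query, condense each with the LLM, then join them."""
    parts = []
    for query in summary_queries(name):
        statements = retrieve(query)             # top-k memory texts for this query
        parts.append(answer(query, statements))  # one LLM completion per query
    description = ". ".join(parts)
    return f"Name: {name} (age: {age})\nSummary: {description}"


# Stubs standing in for the retriever and the Guidance prompts.
def fake_retrieve(query: str) -> List[str]:
    return ["Sam is a Ph.D student", "Sam likes computer"]


def fake_answer(query: str, statements: List[str]) -> str:
    return f"{len(statements)} statements about {query}"


summary = build_summary("Sam", 23, fake_retrieve, fake_answer)
print(summary)
```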

Let’s start

Okay, let’s create a generative agent named Sam. We can give him some initial memories to start with:

description = "Sam is a Ph.D student, his major is CS;Sam likes computer;Sam lives with his friend, Bob;Sam's father is a doctor;Sam has a dog, named Max"
sam = GenerativeAgent(guidance=guidance,
                      name='Sam',
                      age=23,
                      des=description,
                      trails='funny, like football, play CSGO',
                      embeddings_model=embeddings_model)
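The description packs several initial memories into one semicolon-separated string. A plausible way to turn it into individual seed memories is a simple split; the `split_description` helper below is hypothetical, and how the agent actually parses `des` is an assumption here.

```python
from typing import List


def split_description(description: str) -> List[str]:
    """Split a ';'-separated description into individual seed memories."""
    return [part.strip() for part in description.split(";") if part.strip()]


description = ("Sam is a Ph.D student, his major is CS;Sam likes computer;"
               "Sam lives with his friend, Bob;Sam's father is a doctor;"
               "Sam has a dog, named Max")
for memory in split_description(description):
    print(memory)
```

Stripping each part also handles descriptions written with "; " separators, and empty fragments from a trailing semicolon are dropped.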

According to the description, Sam is a Ph.D. student who lives with his friend Bob and has a dog named Max. Let’s set the clock to 7:25 and add more memories.

from datetime import datetime

now = datetime.now()
new_time = now.replace(hour=7, minute=25)
sam.set_current_time(new_time)
sam_observations = [
    "Sam wake up in the morning",
    "Sam feels tired because of playing games",
    "Sam has an assignment of AI course",
    "Sam see Max is sick",
    "Bob say hello to Sam",
    "Bob leave the room",
    "Sam say goodbye to Bob",
]
sam.add_memories(sam_observations)

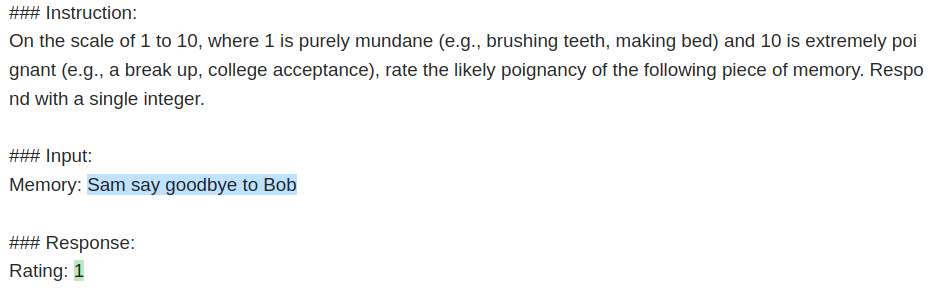

As you can see, each memory gets an importance score before it is stored in the memory stream. Now, let’s generate Sam’s summary:
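With Guidance, the importance rating can be constrained directly in the template; with a plain text completion, you need a defensive parser because the model may not follow the format. A minimal sketch, following the rating prompt described in the paper; the `parse_importance` helper and the fallback value of 5 are assumptions, not part of my implementation.

```python
import re

IMPORTANCE_PROMPT = (
    "On the scale of 1 to 10, where 1 is purely mundane (e.g., brushing teeth, "
    "making bed) and 10 is extremely poignant (e.g., a break up, college "
    "acceptance), rate the likely poignancy of the following piece of memory.\n"
    "Memory: {memory}\n"
    "Rating:"
)


def parse_importance(llm_output: str, default: int = 5) -> int:
    """Take the first integer in the model's reply and clamp it to 1..10."""
    match = re.search(r"\d+", llm_output)
    if match is None:
        return default  # the model ignored the format; fall back
    return max(1, min(10, int(match.group())))


print(IMPORTANCE_PROMPT.format(memory="Sam see Max is sick"))
print(parse_importance(" 7"))          # 7
print(parse_importance("I'd say 12"))  # 10 (clamped)
print(parse_importance("no idea"))     # 5 (default)
```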

summary = sam.get_summary(force_refresh=True)

print(summary)

That looks fine. Let’s test how he makes a plan for tonight.

new_time = now.replace(hour=18, minute=15)

sam.set_current_time(new_time)

status = sam.update_status()

That is quite a plan for an IT student on a Friday night. Now, let’s see how he reacts to an observation that reminds him of his sick dog:

bool_react, reaction, context = sam.react(observation='The dog bowl is empty',
                                          observed_entity='Dog bowl',
                                          entity_status='The dog bowl is empty')

Given the empty dog bowl, his reaction is reasonable. Sam also updates his plan accordingly.
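Re-planning after a reaction amounts to keeping what has already happened and regenerating the rest of the day from the current time. The sketch below shows only that bookkeeping on a hypothetical plan format (a list of start-time and activity pairs); the `replan` helper is hypothetical, in the agent itself the new items come from the LLM, and the activities here are invented for illustration.

```python
from datetime import time
from typing import List, Tuple

# Hypothetical plan format: (start time, activity) pairs.
Plan = List[Tuple[time, str]]


def replan(plan: Plan, now: time, new_items: Plan) -> Plan:
    """Keep items that started before `now`; replace the rest with new_items."""
    kept = [(start, activity) for start, activity in plan if start < now]
    return kept + sorted(new_items)


evening: Plan = [
    (time(18, 0), "have dinner"),
    (time(19, 0), "work on the AI assignment"),
    (time(21, 0), "play CSGO"),
]
# In the agent, these items would be generated by the LLM after the reaction.
new_items: Plan = [
    (time(20, 0), "work on the AI assignment"),
    (time(18, 30), "take Max to the vet"),
]
updated = replan(evening, time(18, 30), new_items)
for start, activity in updated:
    print(start.strftime("%H:%M"), activity)
```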

Next, let’s have Sam interact with another agent, so we define his friend Bob:

description = "Bob is a Ph.D student; His major is Art; He likes reading; He lives with his friend, Sam; He likes playing Minecraft"
bob = GenerativeAgent(guidance=guidance,
                      name='Bob',
                      age=23,
                      des=description,
                      trails='like pizza, friendly, lazy',
                      embeddings_model=embeddings_model)

Now, suppose Sam sees Bob come back with a new PC and start setting it up:

bool_react, reaction, context = sam.react(observation='Bob come room with a new PC',
                                          observed_entity=bob,
                                          entity_status='Bob is setting up his new PC')

Oh, Sam decides to talk with Bob about his new PC. He also changes his plan. Let’s check the dialogue:

Now, let’s add more memories and ask Sam some questions.

sam_observations = [
    "Sam call his father to ask about work",
    "Sam try to finish his assignment",
    "Sam remember his friend ask him for playing game",
    "Sam wake up, feel tired",
    "Sam have breakfast with Bob",
    "Sam look for an internship",
    "The big concert will take place in his school today",
    "Sam's bike is broken",
    "Sam go to school quickly",
    "Sam find a good internship",
    "Sam make a plan to prepare his father's birthday",
]
sam.add_memories(sam_observations)

summary = sam.get_summary(force_refresh=True)

status = sam.update_status()

Okay, first question:

response = sam.intereview('Friend', 'Give an introduction of yourself.')

How about his friend?

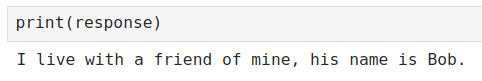

response = sam.intereview('Friend', 'Who do you live with?')

And finally, about his dog, Max:

response = sam.intereview('Friend', 'Who is Max?')

As you can see, Sam acts and answers these questions quite well. You can add more agents and build an environment around them. I believe it would be a lot of fun.

Implementing Generative Agent With Local LLM, Guidance, and Langchain was originally published in Better Programming on Medium.