And the steps you can use to do it yourself with no code

I was introduced to an alcohol distribution business that was manually processing over 30,000 orders placed via email annually. Here’s how I used AgentHub to automate the ordering process with LLMs and the steps to do it yourself with no code.

The Problem

On any given day, this company’s inbox is flooded with emails from liquor stores or restaurants placing bulk alcohol orders. Each email is its own time-consuming puzzle. Does the email include their customer ID? Did they bother to list the item ID for any of the SKUs? Are the items in stock? Should a discount be applied?

A team of humans operating the order desk has to ask themselves these questions for each email. Any missing information requires combing through internal PDFs to fill in the blanks. They spend hours every day converting emails of varying quality into clicks in their order placement software. On top of the work being extremely tedious, the order desk team is expensive to staff and becomes a bottleneck as the company scales.

The Solution

I created two GPT4-powered pipelines that emulate the employees’ end-to-end workflow. The first ingests and understands orders; the second takes action on each approved order.

Splitting the solution in two allows a human to sit in the middle and oversee the daily orders. This provides a sense of control while still reducing their workload by over 95%.

- Pipeline 1: Read all emails in their inbox, extract provided info, fill in the blanks using their internal PDFs and Excel sheets. Send a summary email describing each new order to the order desk operator. Every order summary comes with an “Approve” link, which triggers pipeline 2 for that order.

- Pipeline 2: This pipeline places the order in the company’s internal system and then automatically sends a confirmation email to the customer.
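The article doesn't say how the "Approve" link is implemented. One common approach, sketched here purely as an assumption, is to sign each order ID with an HMAC so that only links generated by pipeline 1 can trigger pipeline 2. The secret key, endpoint URL, and function names below are hypothetical.

```python
import hashlib
import hmac
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret and endpoint for illustration; not part of AgentHub.
SECRET_KEY = b"replace-with-a-real-secret"
APPROVE_ENDPOINT = "https://example.com/approve"


def sign(order_id: str) -> str:
    """Return a hex HMAC-SHA256 signature for an order ID."""
    return hmac.new(SECRET_KEY, order_id.encode("utf-8"), hashlib.sha256).hexdigest()


def make_approve_link(order_id: str) -> str:
    """Build the "Approve" link that pipeline 1 would embed in the summary email."""
    return f"{APPROVE_ENDPOINT}?{urlencode({'order_id': order_id, 'sig': sign(order_id)})}"


def handle_approve_click(url: str) -> Optional[str]:
    """Verify a clicked link; return the order ID to hand to pipeline 2, else None."""
    params = parse_qs(urlparse(url).query)
    order_id = params.get("order_id", [""])[0]
    sig = params.get("sig", [""])[0]
    # Constant-time comparison so the signature can't be guessed byte by byte.
    if order_id and hmac.compare_digest(sig.encode("utf-8"), sign(order_id).encode("utf-8")):
        return order_id
    return None


link = make_approve_link("PO-1042")
approved = handle_approve_click(link)  # would trigger pipeline 2 for PO-1042
forged = handle_approve_click(link.replace("PO-1042", "PO-9999"))  # rejected
```

Because the signature covers the order ID, editing the ID in a copied link invalidates it, so the human operator's click is the only thing that can start pipeline 2 for a given order.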

For the sake of this article, I’ll be focusing on pipeline 1. This is where the real magic happens, and LLMs make a (Medium-article-worthy) difference.

How It Works

This solution was built using AgentHub, a low-code solution for creating AI pipelines. Each pipeline consists of modular components called operators. All operators are open source on GitHub with accompanying documentation here. This documentation is GPT4-generated using an AgentHub pipeline, but that is for another article.

Full disclosure, I’m one of the creators of AgentHub, so I may be biased, but I think it’s the most efficient way to automate your business with AI.

Put simply, the pipeline is a series of specific GPT4 queries saturated with business-specific context. It consists of 26 operators, five of which are AI-powered; the rest are scaffolding needed to manipulate and manage context. I’ll break it down into its three main sections.
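To make the "operators plus scaffolding" idea concrete, here is a minimal sketch of a linear pipeline in Python, assuming each operator is a function that receives a shared context dictionary and returns an updated copy. This is not AgentHub's actual operator interface; the operator names and the canned model reply are hypothetical stand-ins.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Operator = Callable[[Context], Context]


def run_pipeline(operators: List[Operator], context: Context) -> Context:
    """Pass the shared context through each operator in order."""
    for op in operators:
        context = op(context)
    return context


# Stand-ins for three operators; real ones would call Gmail, a PDF parser, and GPT-4.
def read_email(ctx: Context) -> Context:
    return {**ctx, "email": "Hi, 2 cases of hush rose please. Tommy from the Gull"}


def build_prompt(ctx: Context) -> Context:
    # Scaffolding operator: no AI here, it only reshapes context for the next step.
    return {**ctx, "prompt": f"Extract the order from this email:\n{ctx['email']}"}


def fake_llm(ctx: Context) -> Context:
    # Placeholder for an "Ask ChatGPT"-style operator; returns a fixed reply.
    return {**ctx, "answer": '{"item": "hush rose", "quantity": 2}'}


result = run_pipeline([read_email, build_prompt, fake_llm], {})
print(result["answer"])
```

Keeping every operator's input and output as plain data is what lets most of the operators be simple glue while only a handful actually call the model.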

Email reading

Step one is to process all new orders that arrive in the company’s order desk inbox. All unread emails and attachments are passed to the LLM to extract the key information required to place the order.

- Gmail reader: scrapes all unread emails from your inbox and splits the body from the attachments. If attachments are present, they’re almost always PDFs containing order details.

- Ingest PDF: reads the PDF and converts its content to a nicely formatted piece of text.

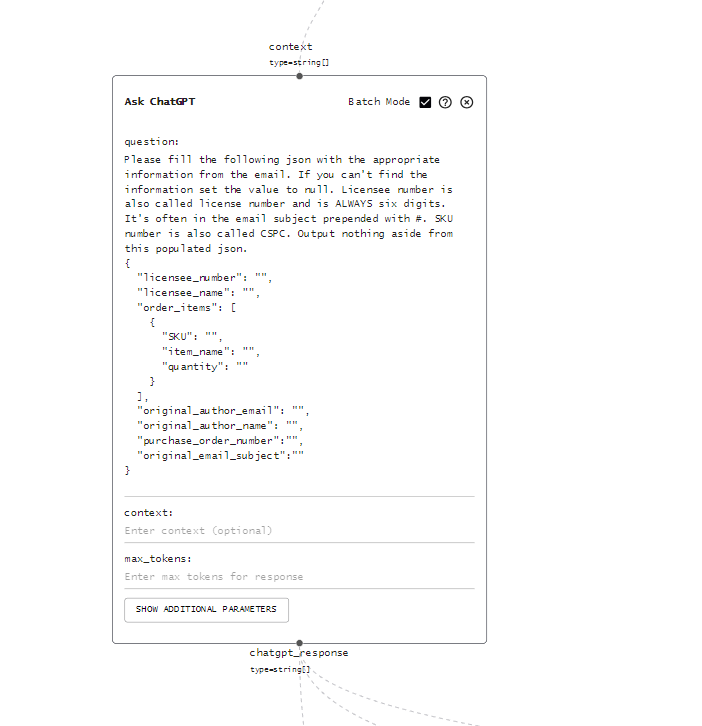

- Ask ChatGPT: This operator takes context from upstream operators and injects it into your prompt. In this case, the context is the formatted email string (containing both the email body and the PDF content), and the prompt commands the LLM to populate a predefined JSON template with values from the email.
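The email-reading steps above can be approximated with Python's standard `email` package. This sketch assumes the inbox yields raw message bytes and that PDF text has already been extracted by a separate parser (the Ingest PDF step, not shown). `ORDER_TEMPLATE` and the helper names are hypothetical, and the model reply is a canned string rather than a real API call.

```python
import copy
import json
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser
from typing import Any, Dict, List, Tuple

# Hypothetical "predefined JSON"; the real fields depend on the order system.
ORDER_TEMPLATE: Dict[str, Any] = {"customer_name": None, "customer_id": None, "items": []}


def split_email(raw: bytes) -> Tuple[str, List[Tuple[str, bytes]]]:
    """Return the plain-text body and any PDF attachments as (filename, bytes)."""
    msg = BytesParser(policy=policy.default).parsebytes(raw)
    body_part = msg.get_body(preferencelist=("plain",))
    body = body_part.get_content() if body_part is not None else ""
    pdfs = [
        (part.get_filename() or "attachment.pdf", part.get_content())
        for part in msg.iter_attachments()
        if part.get_content_type() == "application/pdf"
    ]
    return body, pdfs


def build_extraction_prompt(body: str, pdf_texts: List[str]) -> str:
    """Inject the email body and already-extracted PDF text into the prompt."""
    context = body + "".join(f"\n\n[Attachment]\n{text}" for text in pdf_texts)
    return (
        "Fill in this JSON using only values stated in the email below. "
        "Use null for anything that is missing.\n"
        f"{json.dumps(ORDER_TEMPLATE)}\n\nEMAIL:\n{context}"
    )


def parse_order(reply: str) -> Dict[str, Any]:
    """Keep only template keys from the model's JSON reply, defaulting the rest."""
    data = json.loads(reply)
    return {key: data.get(key, copy.deepcopy(default)) for key, default in ORDER_TEMPLATE.items()}


# Example: a text email with one PDF attachment, round-tripped through raw bytes.
incoming = EmailMessage()
incoming["Subject"] = "Order"
incoming.set_content("2 cases of hush rose please.\nTommy from the Gull")
incoming.add_attachment(b"%PDF-1.4 ...", maintype="application", subtype="pdf", filename="po.pdf")

body, pdfs = split_email(incoming.as_bytes())
prompt = build_extraction_prompt(body, ["<text extracted from po.pdf>"])
order = parse_order('{"customer_name": "The Gull", "items": [{"name": "hush rose", "qty": 2}]}')
```

Filtering the reply down to the template's keys means a model that adds or omits fields still produces the same shape for the downstream operators.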

Context-saturated prompting

This is my favourite part. Once an order reaches this stage, we need to fill in any missing information and validate it, just like an employee would. We use the LLM for this and leave as little room as possible for hallucinations by passing all relevant context into the prompt.

I like to compare this to asking a student about a key concept in their textbook while pointing to the exact page and paragraph that contains the answer.

The utility of the pipeline depends on the student answering correctly. Three variables contribute to the student’s success.

How clear is the question? -> How explicit is your prompt?

Are you pointing to the correct paragraph? -> How relevant is the context you’re providing?

Finally, how smart is the student? -> How powerful is your LLM?

I’m using GPT4 (might as well use the valedictorian if we can), but if your question is clear enough and you’re pointing to the most relevant paragraph, any student should be able to ace the question reliably enough to generate significant value for the business.

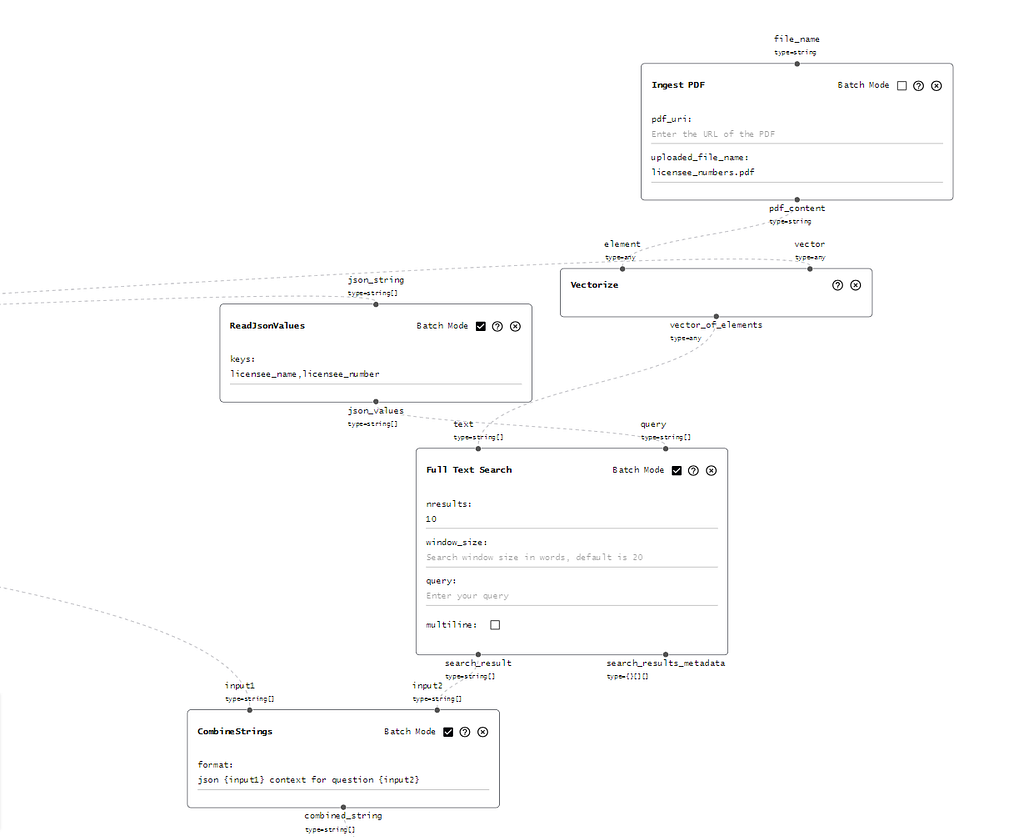

The following pipeline section illustrates one of these very specific, context-saturated prompts. I’m asking the LLM to ensure the customer name and ID match while feeding the most relevant results from a 162-page PDF of customer information.

- Full-Text Search (FTS): This is the metaphorical paragraph-pointing finger. It takes a large body of text, which in our case is a PDF, and searches it for the excerpts most relevant to the query. In this image, our query is the customer name and customer ID we currently have for the order; it’s likely we only have one of the two. The PDF lines referring to this customer are returned as context for the LLM query.

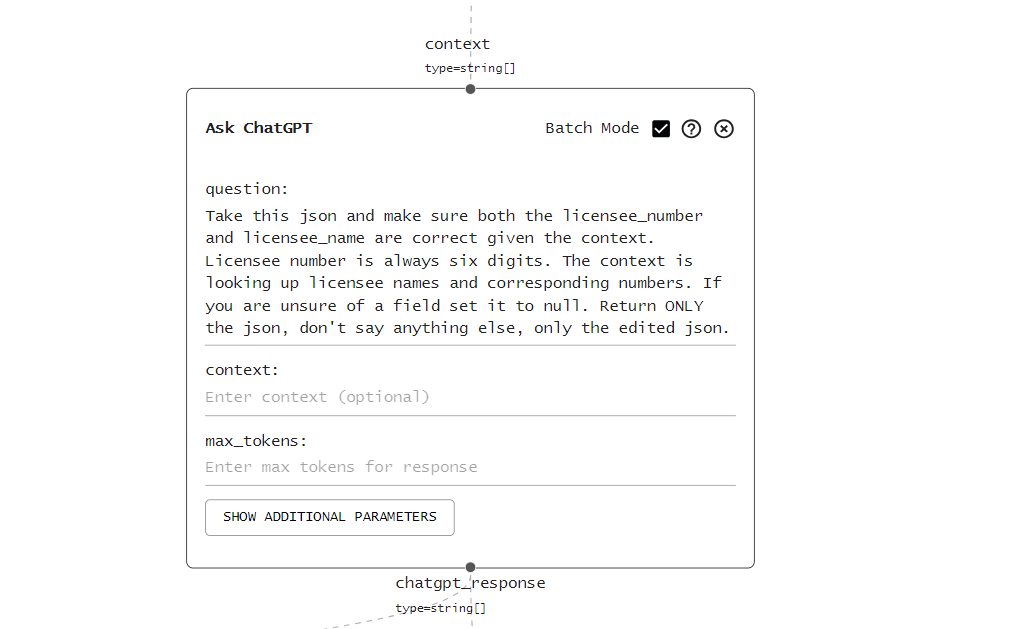

- Ask ChatGPT: Now that we have all the info about the customer from our PDF search, the LLM can easily check that the values match, setting them to null if it’s unsure.
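The search-then-ask pattern can be sketched in a few dozen lines. As assumptions: the customer PDF is already split into text lines, a simple word-overlap ranking stands in for AgentHub's Full-Text Search operator (a real full-text index ranks results more carefully), and `guard` is an extra check added here, not something the article describes, which nulls any value the model returns that does not literally appear in the retrieved records. The records and model replies are made up.

```python
import json
import re
from typing import Dict, List, Optional, Set


def tokenize(text: str) -> Set[str]:
    """Lower-case word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(lines: List[str], query: str, k: int = 3) -> List[str]:
    """Return up to k lines sharing the most words with the query (ties keep file order)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(line)), i, line) for i, line in enumerate(lines)]
    hits = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [line for _, _, line in hits[:k]]


def build_check_prompt(name: Optional[str], cid: Optional[str], excerpts: List[str]) -> str:
    """Ask the model to reconcile name and ID using only the retrieved records."""
    return (
        "Using only the customer records below, return JSON with keys "
        "customer_name and customer_id for the customer this order is from. "
        "Use null for any value you are unsure of.\n"
        f"Order says: name={name!r}, id={cid!r}\n\nRECORDS:\n" + "\n".join(excerpts)
    )


def guard(reply: str, excerpts: List[str]) -> Dict[str, Optional[str]]:
    """Null out any returned value that does not literally appear in the excerpts."""
    data = json.loads(reply)
    joined = "\n".join(excerpts)
    checked: Dict[str, Optional[str]] = {}
    for key in ("customer_name", "customer_id"):
        value = data.get(key)
        checked[key] = value if value and str(value) in joined else None
    return checked


# Hypothetical lines from the customer PDF after text extraction.
records = [
    "C-1001  The Gull Bar & Grill  12 Harbour St",
    "C-1002  Seagull Liquor  40 Main St",
    "C-2040  Riverside Wines  8 Mill Rd",
]
excerpts = search(records, "The Gull")  # only the first record shares words with the query
prompt = build_check_prompt("The Gull", None, excerpts)
good = guard('{"customer_name": "The Gull Bar & Grill", "customer_id": "C-1001"}', excerpts)
bad = guard('{"customer_name": "The Gull", "customer_id": "C-9999"}', excerpts)  # ID nulled
```

The same three steps (search, prompt with excerpts, check the reply) can be reused for item IDs, stock, or discounts by swapping the records and the question.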

We repeat this prompting pattern for every key question an employee would ask themselves while placing an order.

Summarization

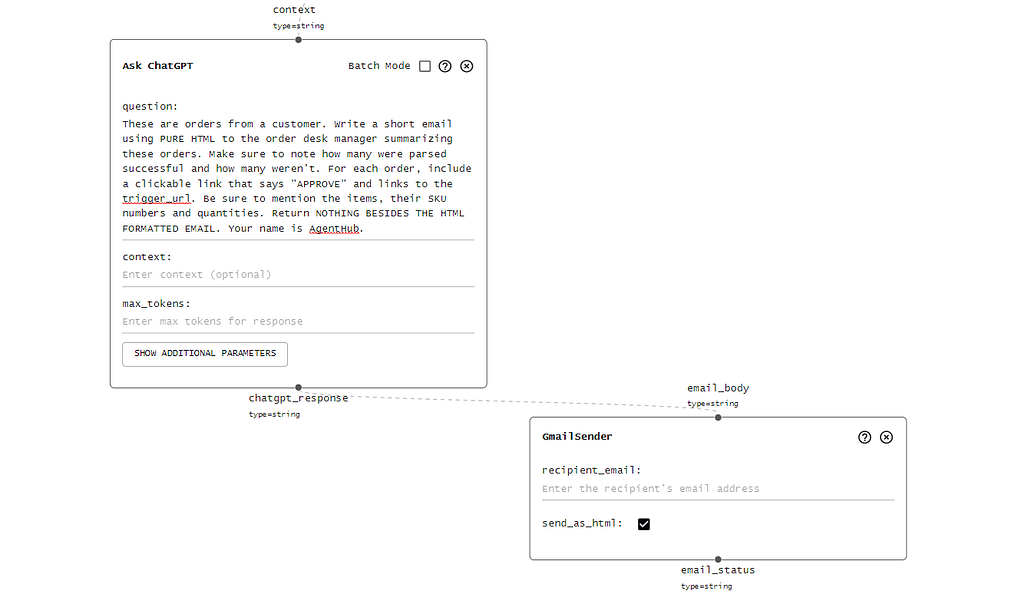

Finally, we take the data from each processed order and pass it in as context so GPT4 can summarize all the orders.

- Ask ChatGPT: We’re asking the LLM to summarize the orders using valid HTML so the resulting email can be more dynamic and include formatted links.

- Gmail sender: This operator is set up to send emails via the AgentHub notifications account. All you need to do is pass in text and decide whether you want the email sent as HTML; no passwords are required.
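AgentHub's Gmail sender handles delivery through its own notifications account. If you were wiring up the same step yourself without AgentHub, one option is Python's standard `email` and `smtplib` modules, sketched below under the assumption that the model's reply is already a complete HTML fragment. The sender, recipient, SMTP host, and credentials are placeholders, and the sending call is left commented out so the example runs offline.

```python
import smtplib
from email.message import EmailMessage


def build_summary_email(html_summary: str, sender: str, recipient: str) -> EmailMessage:
    """Wrap an LLM-written HTML summary in an email with a plain-text fallback."""
    msg = EmailMessage()
    msg["Subject"] = "New orders awaiting approval"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("This summary is formatted as HTML; please view it in an HTML-capable client.")
    msg.add_alternative(html_summary, subtype="html")  # message becomes multipart/alternative
    return msg


def send_via_smtp(msg: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    """Send over SMTP with STARTTLS. Host and credentials are placeholders."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(msg)


# Hypothetical model output; in the pipeline this would come from the summarizing prompt.
html = '<p>The Gull: 2 x hush rose. <a href="https://example.com/approve?order_id=PO-1042">Approve</a></p>'
msg = build_summary_email(html, "orders-bot@example.com", "orderdesk@example.com")
# send_via_smtp(msg, "smtp.example.com", 587, "orders-bot@example.com", "app-password")
```

Sending the HTML as an alternative part, rather than as the only body, keeps the summary readable in clients that block HTML while preserving the clickable links everywhere else.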

Results

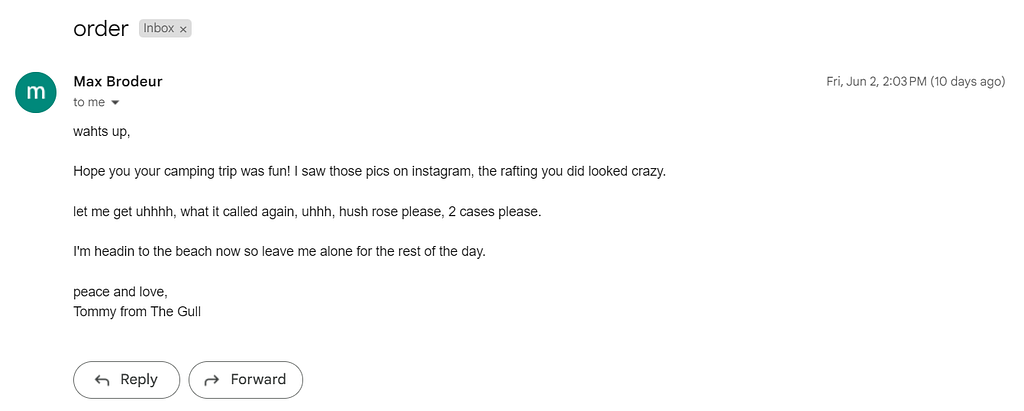

Here’s an example run for two incoming orders. The first is a standard purchase order from a customer that refers to a PDF for details; I won’t include it to avoid exposing the customer’s information. I wrote the second one myself to be extremely vague.

Here’s the summary email of both orders generated by the LLM. You could ask it to have a bit more personality if you wanted, but I appreciated the directness after using it for a while.

The first order was very straightforward, as the LLM could decipher all the relevant info from the order PDF. The second was tricky since it had no PDF, customer ID, or item ID. It didn’t even mention the winery that sells the “hush rose.” The LLM acted like a senior order desk employee and looked up all info needed to place the order.

- Customer ID: it used the signature “Tommy from the Gull” to infer that this customer works at “The Gull.” The full-text search found this customer’s info in the internal PDF, and the LLM assigned the correct ID.

- Item ID: the email requested the “hush rose.” Once again, the full-text search found all item info related to this phrase, and the LLM assigned the exact item ID.

Key Learnings

Context is all you need: The question you ask your LLM is only as strong as the context you provide alongside it. “Context Engineer” feels like a more appropriate moniker for someone trying to build useful LLM applications. Determining the most relevant context for your prompt and how to access it efficiently is the real challenge.

Familiarity with the problem outweighs technical ability: If I were contracting someone to automate part of my business, I’d choose someone who knows the industry over the best engineer.

While building, the only orders my pipelines failed on were ones I would also have failed to understand. Details like knowing that a SKU number can also be called a CSPC number, among many other important morsels of knowledge, were learned by immersing myself in the problem.

The more time I spent with the customer, the better the solution. Making improvements to the pipeline was trivial since almost all tweaks were to the natural language prompts. Additionally, if I ever needed to add features to an operator, it was only a ChatGPT prompt away.

How To Build Your Own Automation

You can visit AgentHub and build your own automation right now. All you need is an OpenAI API key and, at most, a basic understanding of LLMs.

For inspiration, here’s a pipeline that automates my Twitter account 🐦 (an article I wrote explaining it) and another that generates our open source documentation using GPT4.

The exact operator you need for your pipeline may not exist just yet. We encourage you to contribute your own here, or join the Discord if you need our help making it a reality.

I’d love to hear about any automation you’re trying to build and lend a helping hand if needed. Feel free to reach out to me (max@agenthub.dev) or my co-founder Misha (misha@agenthub.dev) anytime.

Thanks for reading 👋

How I Automated a Business’ Order Fulfillment Process With LLMs was originally published in Better Programming on Medium.