The main difficulty I’ve encountered while coaxing GPT-4 into handling increasingly complex tasks is determining whether the AI’s approach is correct. For instance, when tasked with deploying an Infrastructure as Code project, GPT-4 may repeatedly get stuck setting up AWS credentials, be led astray by misleading tips and constraints, and eventually exceed the context window, causing the system to crash.

However, under the right circumstances, with a suitable prompt, GPT-4 demonstrates the capability to deduce the appropriate series of bash commands necessary to achieve the given objective. This has been evident in my past articles where GPT-4 was shown to successfully test and deploy a Lambda function or carry out more complex tasks when nudged to self-improve its prompts.

The limitations of the self-improving AI, however, remained a challenge: it was restricted to five executions, and it was unclear how to build upon successful iterations. Undeterred, I set out to find a solution and came up with a promising one.

To that end, I’ve added logic to the self-improving AI described in my previous articles. The principal addition is another AI, designed to analyze the entire agent execution and extract action nodes from it. These nodes, written in natural language, contain both a description and an example of a successful command execution.

This data is then utilized to tailor the base agent’s prompt by appending it to the relevant tips. Leveraging semantic search, we can identify pertinent actions to achieve new objectives, and a separate AI can formulate useful tips to undertake more complex tasks. This mechanism bypasses the AI’s propensity to veer off into unnecessary complexities by validating the actions beforehand and building upon them.
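The retrieval step described above could be sketched roughly as follows. This is a minimal illustration, not the code from the repository: `ActionNode`, `embed`, `top_k_actions`, and `build_tips` are hypothetical names, and `embed` is a toy bag-of-words stand-in for a real embedding model so that the example runs without any external service.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class ActionNode:
    name: str
    description: str


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_actions(goal: str, actions: list[ActionNode], k: int = 2) -> list[ActionNode]:
    """Return the k stored actions most similar to the new goal."""
    goal_vec = embed(goal)
    scored = [
        (cosine_similarity(goal_vec, embed(f"{a.name} {a.description}")), a)
        for a in actions
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [action for _, action in scored[:k]]


def build_tips(goal: str, actions: list[ActionNode], k: int = 2) -> str:
    """Format the retrieved actions as lines to append to the agent's tips."""
    relevant = top_k_actions(goal, actions, k)
    return "\n".join(f"- {a.name}: {a.description}" for a in relevant)
```

In a real setup, `embed` would be replaced by a call to an embedding model and the vectors would be computed once and stored alongside each action rather than recomputed per query.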

Here is the prompt I am using to extract action nodes:

extracted_actions_prompt = """

Goal: {goal}

{execution_output}

Given the above execution output, extract the actions from the execution

output. Give the actions names like "Send an email" with a description like,

"To send an email run ```mail -S <subject> < <data>```".

An action node should be broken into several subnodes that are navigable.

This information will be re-used in the subsequent iterations.

"""

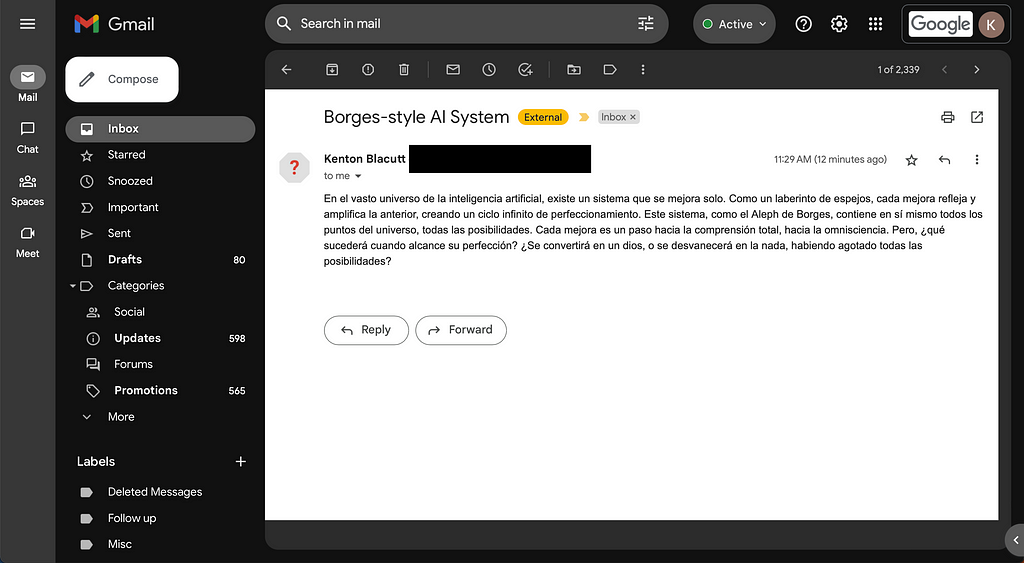

And here are sample actions extracted from a successful execution with the goal of sending an email to me in Spanish in Borges’s style about a self-improving AI system:

Extracted actions:

1. Action: Write to a file

Description: To write to a file, use the echo command and redirect the output to a file. The command should be formatted as follows: ```echo "<text>" > <filename>```

How to use: We can use this action to write the Borges-style piece about the self-improving AI system in Spanish. The text will be the Borges-style writing, and the filename can be something like "borges_style_ai.txt".

2. Action: Send an email

Description: To send an email, use the cat command to read the content of a file, and pipe it into the mail command with the -s flag for the subject and the recipient's email. The command should be formatted as follows: ```cat <filename> | mail -s "<subject>" <recipient's email>```

How to use: We can use this action to send the written piece about the self-improving AI system to Kenton. The filename will be the file containing the Borges-style writing, the subject can be something like "Borges-style AI System", and the recipient's email is <recipient's email>.

3. Action: Confirm email sent

Description: To confirm that the email was sent successfully, use the mailq command. This will display the mail queue, where you can see if your email is still pending or if it has been sent. The command should be formatted as follows: ```mailq```

How to use: After sending the email, we can use this action to confirm that the email was successfully sent to Kenton.
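Because the extracted actions follow a regular "Action / Description / How to use" layout, they can be turned into structured records before being embedded and stored. The sketch below assumes exactly that layout, with each field on a single line; `ExtractedAction`, `parse_actions`, and `example_command` are hypothetical helpers, not part of the published code.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Three backticks, built programmatically so this listing stays easy to embed.
FENCE = "`" * 3


@dataclass
class ExtractedAction:
    name: str
    description: str
    how_to_use: str


def parse_actions(model_output: str) -> list[ExtractedAction]:
    """Parse numbered 'Action / Description / How to use' entries."""
    actions: list[dict] = []
    current = None
    for raw_line in model_output.splitlines():
        line = raw_line.strip()
        header = re.match(r"^\d+\.\s*Action:\s*(.+)$", line)
        if header:
            # A numbered "Action:" line starts a new entry.
            current = {"name": header.group(1), "description": "", "how_to_use": ""}
            actions.append(current)
        elif current is not None and line.startswith("Description:"):
            current["description"] = line[len("Description:"):].strip()
        elif current is not None and line.startswith("How to use:"):
            current["how_to_use"] = line[len("How to use:"):].strip()
    return [ExtractedAction(**fields) for fields in actions]


def example_command(description: str) -> Optional[str]:
    """Return the first command wrapped in triple backticks, if any."""
    pattern = re.escape(FENCE) + r"(.+?)" + re.escape(FENCE)
    match = re.search(pattern, description)
    return match.group(1).strip() if match else None
```

Keeping the example command separate from the prose description makes it possible to re-run the command later and check that a stored action still works.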

With those actions stored with their respective embeddings, I can now be confident that the AI will be able to send emails.

Additionally, I feed the extracted action nodes to the Meta AI using the prompt fragment below, enabling it to formulate better prompts when attempting more complex goals.

{{I will make sure to work my notes into the tips and constraints ONLY if they seem useful. }}

{{Notes: {actions} }}
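The doubled braces in this fragment look like escapes in a Python format string, where `{{` and `}}` render as literal braces and `{actions}` is the only placeholder. Assuming the fragment is filled in with `str.format` (the article does not say which templating mechanism is used), the substitution could be sketched like this, with `render_notes` as a hypothetical helper:

```python
# The fragment from the article, written as a Python format string.
META_NOTES_TEMPLATE = (
    "{{I will make sure to work my notes into the tips and constraints "
    "ONLY if they seem useful. }}\n"
    "{{Notes: {actions} }}"
)


def render_notes(action_summaries: list[str]) -> str:
    """Join action summaries and substitute them for the {actions} placeholder."""
    actions = "; ".join(action_summaries)
    return META_NOTES_TEMPLATE.format(actions=actions)


if __name__ == "__main__":
    print(render_notes(["Write to a file", "Send an email"]))
```

Values passed to `format` are inserted verbatim, so braces or angle brackets inside an action description do not need escaping.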

In this manner, the AI system can incrementally acquire expertise in a specific domain, becoming proficient in executing precise commands. This capability could be enhanced by programming the AI to conceive multiple simple level 1 goals, followed by more complex level 2 goals that integrate several level 1 goals, and so on.

Over time, we can expect a functional AI system capable of delivering high-quality outcomes, regardless of the task at hand.

The system I’ve developed aligns significantly with the Tree of Thought framework, with a distinct enhancement: the Large Language Model (LLM) not only formulates but also corroborates the effectiveness of a method by executing a series of bash commands. This procedure assists in ascertaining the validity of each sub-node. Conceptually, this system can be likened to an intricate graph search challenge, where the primary node signifies the ultimate objective, and the array of sub-nodes represents various routes or actions that the AI can undertake to fulfill that objective.
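To make the graph analogy concrete, here is a small sketch of a goal tree whose leaves are validated by actually running their commands. It rests on assumptions that go beyond the article: `Node`, `run_in_shell`, and `validate` are hypothetical names, and a parent is treated as valid only when all of its children succeed (if sub-nodes are alternative routes instead, `any` would replace `all`).

```python
import subprocess
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Node:
    description: str
    command: Optional[str] = None  # leaf actions carry a shell command
    children: list["Node"] = field(default_factory=list)
    validated: bool = False


def run_in_shell(command: str) -> bool:
    """Run a command in the shell; True means it exited with status 0."""
    result = subprocess.run(command, shell=True, capture_output=True, timeout=60)
    return result.returncode == 0


def validate(node: Node, run: Callable[[str], bool] = run_in_shell) -> bool:
    """Depth-first validation of a goal tree.

    A leaf is valid if its command succeeds; an inner node is valid if it
    has children and every child is valid (assumption, see lead-in).
    """
    if node.command is not None:
        node.validated = run(node.command)
    else:
        results = [validate(child, run) for child in node.children]
        node.validated = bool(results) and all(results)
    return node.validated
```

The `run` parameter is injectable so the traversal can be exercised with a fake runner before any real command is executed, for example `validate(root, run=lambda cmd: True)`.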

Moving forward, a key priority will be to assess how the system performs with a large number of stored actions and how that multitude of options affects the AI’s effectiveness. At present, too many actions are being integrated, and the search returns an overwhelming number of them as relevant. A cosine similarity threshold is one possible way to address this.
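One way such a threshold might look is sketched below. It assumes each stored action already has a dense embedding vector; `filter_actions`, the default threshold of 0.8, and the cap of five results are illustrative placeholders that would need tuning against real data.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_actions(
    goal_vec: list[float],
    stored: dict[str, list[float]],
    threshold: float = 0.8,   # placeholder value, not taken from the article
    max_results: int = 5,     # placeholder cap on returned actions
) -> list[str]:
    """Keep only actions at or above the similarity threshold, best first."""
    scored = [(cosine_similarity(goal_vec, vec), name) for name, vec in stored.items()]
    kept = [(score, name) for score, name in scored if score >= threshold]
    kept.sort(reverse=True)
    return [name for _, name in kept[:max_results]]
```

Combining a threshold with a hard cap keeps the prompt small even when many stored actions score above the cutoff.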

I also intend to test this system with the AI code development frameworks SMOL AI and GPT Engineer. The aim is to tweak these frameworks so that any code they generate is automatically tested by the respective agent, ensuring that the resulting code is valid.

Additionally, I’m intrigued by the possibility of achieving our goals with a smaller LLM. If we can identify the correct actions using GPT-4, it would be worthwhile to examine whether a less resource-intensive model can maintain performance once those actions are known. This could open new doors for efficiency and scalability in our approach to AI development.

Applying this system enables us to build AI architectures skilled in a variety of tasks, including CDK development, Terraform development, data science, system monitoring, and more. It pushes these systems beyond their current capabilities, toward more complex problems that were once considered insurmountable for AI.

If you’re curious to try out the system yourself, here is the code: https://github.com/KBB99/gpt-action-builder

Build AI Systems Capable of Achieving Any Objective With GPT-4 was originally published in Better Programming on Medium.